RSS feed “Planet Gentoo”

Latest news

Gentoo Linux becomes an SPI associated project

2024-04-10 08:00 GentooNews (https://www.gentoo.org/feeds/news.xml)

As of this March, Gentoo Linux has become an Associated Project of Software in the Public Interest, see also the formal invitation by the Board of Directors of SPI. Software in the Public Interest (SPI) is a non-profit corporation founded to act as a fiscal sponsor for organizations that develop open source software and hardware. It provides services such as accepting donations, holding funds and assets, … SPI qualifies for 501(c)(3) (U.S. non-profit organization) status. This means that all donations made to SPI and its supported projects are tax deductible for donors in the United States. Read on for more details…

Questions & Answers

Why become an SPI Associated Project?

Gentoo Linux, as a collective of software developers, is pretty good at being a Linux distribution. However, becoming a US federal non-profit organization would increase the non-technical workload.

The current Gentoo Foundation has bylaws restricting its behavior to that of a non-profit and is recognized as a non-profit in New Mexico only, but it is a for-profit entity at the US federal level. A direct conversion to a federally recognized non-profit would be unlikely to succeed without significant effort and cost.

Finding Gentoo Foundation trustees to take care of the non-technical work is an ongoing challenge. Robin Johnson (robbat2), our current Gentoo Foundation treasurer, spent a huge amount of time and effort on getting bookkeeping and taxes in order after the prior treasurers lost interest and retired from Gentoo.

For these reasons, Gentoo is moving the non-technical organizational overhead to Software in the Public Interest (SPI). As noted above, SPI is already recognized at the US federal level as a full-fledged 501(c)(3) non-profit. It also handles several projects of similar type and size (e.g., Arch and Debian) and as such has exactly the experience and background that Gentoo needs.

What are the advantages of becoming an SPI Associated Project in detail?

Financial benefits to donors:

- tax deductions [1]

Financial benefits to Gentoo:

- matching fund programs [2]

- reduced organizational complexity

- reduced administration costs [3]

- reduced taxes [4]

- reduced fees [5]

- increased access to non-profit-only sponsorship [6]

Non-financial benefits to Gentoo:

- reduced organizational complexity, no “double-headed beast” any more

- less non-technical work required

[1] Presently, almost no donations to the Gentoo Foundation provide a tax benefit for donors anywhere in the world. Becoming an SPI Associated Project enables tax benefits for donors located in the USA. Some other countries do recognize donations made to non-profits in other jurisdictions and provide similar tax credits.

[2] This also depends on jurisdictions and local tax laws of the donor, and is often tied to tax deductions.

[3] The Gentoo Foundation currently pays $1500/year in tax preparation costs.

[4] In recent fiscal years, through careful budgetary planning on the part of the Treasurer and advice of tax professionals, the Gentoo Foundation has used depreciation expenses to offset taxes owing; however, this is not a sustainable strategy.

[5] Non-profits are eligible for reduced fees, e.g., from PayPal (savings of 0.9-1.29% per donation) and other services.

[6] Some sponsorship programs are only available to verified 501(c)(3) organizations.

Can I still donate to Gentoo, and how?

Yes, of course, and please do so! To start, you can go to SPI’s Gentoo page and scroll down to the PayPal and Click&Pledge donation links. More information and more ways to donate will be set up soon. Keep in mind, donations to Gentoo via SPI are tax-deductible in the US!

In time, Gentoo will contact existing recurring donors, to aid transitions to SPI’s donation systems.

What will happen to the Gentoo Foundation?

Our intention is to eventually transfer the existing assets to SPI and dissolve the Gentoo Foundation. The precise steps needed on the way to this objective are still under discussion.

Does this affect in any way the European Gentoo e.V.?

No. Förderverein Gentoo e.V. will continue to exist independently. It is also recognized to serve public-benefit purposes (§ 52 Fiscal Code of Germany), meaning that donations are tax-deductible in the E.U.

The interpersonal side of the xz-utils compromise

2024-04-01 17:54 dilfridge (dilfridge)

While everyone is busy analyzing the highly complex technical details of the recently discovered xz-utils compromise that is currently rocking the internet, it is worth looking at the underlying non-technical problems that make such a compromise possible. A very good write-up can be found on the blog of Rob Mensching...

"A Microcosm of the interactions in Open Source projects"

Optimizing parallel extension builds in PEP517 builds

2024-03-15 18:41 mgorny (mgorny)

The distutils (and therefore setuptools) build system supports building C extensions in parallel, through the -j (--parallel) option, passed either to the build_ext or the build command. Gentoo’s distutils-r1.eclass has always passed this option to speed up builds of packages that feature multiple C files.

However, the switch to the PEP517 build backend made this problematic. While the backend uses the respective commands internally, it doesn’t provide a way to pass options to them. In this post, I’d like to explore the different ways we attempted to resolve this problem, trying to find an optimal solution that would let us benefit from parallel extension builds while preserving minimal overhead for packages that wouldn’t benefit from it (e.g. pure Python packages). I will also include fresh benchmark results to compare these methods.

The history

The legacy build mode utilized two ebuild phases: the compile phase during which the build command was invoked, and the install phase during which the install command was invoked. An explicit command invocation made it possible to simply pass the -j option.

When we initially implemented the PEP517 mode, we simply continued calling esetup.py build, prior to calling the PEP517 backend. The former call built all the extensions in parallel, and the latter simply reused the existing build directory.

This was a bit ugly, but it worked most of the time. However, it suffered from a significant overhead from calling the build command. This meant significantly slower builds in the vast majority of packages that did not feature multiple C source files that could benefit from parallel builds.

The next optimization was to replace the build command invocation with the more specific build_ext. While the former also involved copying all .py files to the build directory, the latter only built C extensions, and therefore could be pretty much a no-op if there were none. As a side effect, we’ve started hitting rare bugs when custom setup.py scripts assumed that build_ext is never called directly. For a relatively recent example, there is my pull request to fix build_ext -j… in pyzmq.

I’ve followed this immediately with another optimization: skipping the call if there were no source files. To be honest, the code started looking messy at this point, but it was an optimization nevertheless. For the no-extension case, the overhead of calling esetup.py build_ext was replaced by the overhead of calling find to scan the source tree. Of course, this still had some risk of false positives and false negatives.

The next optimization was to call build_ext only if there were at least two files to compile. This mostly addressed the added overhead for packages building only one C file — but of course it couldn’t resolve all false positives.

One more optimization was to make the call conditional on the DISTUTILS_EXT variable. While the variable was introduced for another reason (to control adding the debug flag), it provided a nice way to avoid both most of the false positives (even if they were extremely rare) and the overhead of calling find.

The last step wasn’t mine. It was Eli Schwartz’s patch to pass build options via DIST_EXTRA_CONFIG. This provided the ultimate optimization — instead of trying to hack a build_ext call around, we were finally able to pass the necessary options to the PEP517 backend. Needless to say, it meant not only no false positives and no false negatives, but it effectively almost eliminated the overhead in all cases (except for the cost of writing the configuration file).
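To illustrate the idea, here is a minimal sketch of such a configuration file. It relies on the DIST_EXTRA_CONFIG environment variable and the parallel option of build_ext; the file location and the job count of 4 are assumptions made for the example, and the file the eclass actually writes may contain more settings.

```shell
#!/bin/sh
# Sketch: pass "parallel" to build_ext through an extra distutils config file.
# The temporary path and the job count are illustrative assumptions.

write_extra_config() {
    # $1: path of the config file to create, $2: number of parallel jobs
    cat > "$1" <<EOF
[build_ext]
parallel = $2
EOF
}

cfg=$(mktemp)
write_extra_config "${cfg}" 4
cat "${cfg}"

# The PEP517 build would then be started with the file exported, e.g.:
#   DIST_EXTRA_CONFIG="${cfg}" python3.12 -m build -nwx
```

A real build would derive the job count from the user's configuration rather than hard-coding it.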

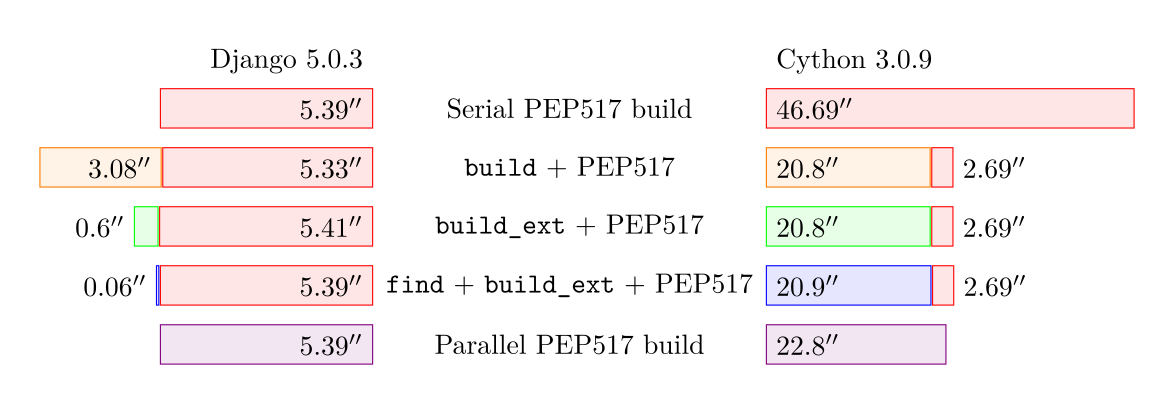

The timings

| Method | Step | Django 5.0.3, step | Django 5.0.3, total | Cython 3.0.9, step | Cython 3.0.9, total |
|---|---|---|---|---|---|
| Serial PEP517 build | PEP517 | 5.4 s | 5.4 s | 46.7 s | 46.7 s |
| build | build | 3.1 s | 8.4 s | 20.8 s | 23.5 s |
| | PEP517 | 5.3 s | | 2.7 s | |
| build_ext | build_ext | 0.6 s | 6 s | 20.8 s | 23.5 s |
| | PEP517 | 5.4 s | | 2.7 s | |
| find + build_ext | find + build_ext | 0.06 s | 5.5 s | 20.9 s | 23.6 s |
| | PEP517 | 5.4 s | | 2.7 s | |
| Parallel PEP517 build | PEP517 | 5.4 s | 5.4 s | 22.8 s | 22.8 s |

For a pure Python package (Django here), the table clearly shows how successive iterations have reduced the overhead of parallel build support, from roughly 3 seconds in the earliest approach, resulting in 8.4 s total build time, to the same 5.4 s as the regular PEP517 build.

For Cython, all but the ultimate solution result in roughly 23.5 s total, half of the time needed for a serial build (46.7 s). The ultimate solution saves another 0.8 s on the double invocation overhead, giving the final result of 22.8 s.

Test data and methodology

The methods were tested against two packages:

- Django 5.0.3, representing a moderate size pure Python package, and

- Cython 3.0.9, representing a package with a moderate number of C extensions.

Python 3.12.2_p1 was used for testing. The timings were taken using the time command from bash. The results were averaged over 5 warm-cache test runs. Testing was done on an AMD Ryzen 5 3600, with pstates boost disabled.

The PEP517 builds were performed using the following command:

python3.12 -m build -nwx

The remaining commands and conditions were copied from the eclass. The test scripts, along with the results, spreadsheet and plot source can be found in the distutils-build-bench repository.
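For readers who want to repeat a measurement of this kind, the following is a rough sketch of averaging several runs. It is not the script from the repository: it measures wall-clock time with GNU date instead of the bash time command, and the function name avg_time is made up for this example.

```shell
#!/bin/sh
# Sketch: average the wall-clock time of a command over several runs.
# Assumes GNU date (for %N nanoseconds) and awk; not the author's script.

avg_time() {
    # $1: number of runs; remaining arguments: the command to time
    runs=$1
    shift
    total_ns=0
    i=0
    while [ "${i}" -lt "${runs}" ]; do
        start=$(date +%s%N)
        "$@" > /dev/null 2>&1
        end=$(date +%s%N)
        total_ns=$((total_ns + end - start))
        i=$((i + 1))
    done
    # Convert nanoseconds to seconds and divide by the number of runs.
    awk -v t="${total_ns}" -v n="${runs}" 'BEGIN { printf "%.2f\n", t / n / 1e9 }'
}

# Example: one untimed warm-up run to fill the caches, then five timed runs.
# python3.12 -m build -nwx > /dev/null 2>&1
# avg_time 5 python3.12 -m build -nwx
```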

Gentoo RISC-V Image for the Allwinner Nezha D1

2024-02-23 03:00 flow (flow)

Motivation

The Allwinner Nezha D1 SoC was one of the first available RISC-V single-board computers (SBC), crowdfunded and released in 2021. According to the manufacturer, “it is the world’s first mass-produced development board that supports 64bit RISC-V instruction set and Linux system.”

Installing Gentoo on this system usually involved grabbing one existing image, like the Fedora one, and swapping the userland with a Gentoo stage3.

Bootstrapping via a third-party image is now no longer necessary.

A Gentoo RISC-V Image for the Nezha D1

I have uploaded an experimental (for now) Gentoo RISC-V image for the Nezha D1 at

https://dev.gentoo.org/~flow/gymage/

Simply dd(rescue) the image onto an SD card and plug that card into your board.
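As a hedged sketch of that step with plain GNU dd: the image file name and /dev/sdX below are placeholders, not names taken from the download page, and the helper function write_image exists only for this example.

```shell
#!/bin/sh
# Sketch: write a disk image to a target and flush it to the medium.
# Assumes GNU dd. The image name and /dev/sdX are placeholders.

write_image() {
    # $1: image file, $2: target (the block device of the SD card)
    dd if="$1" of="$2" bs=4M conv=fsync
}

# Double-check the device name first (e.g. with lsblk): dd overwrites
# the target without asking.
# write_image gentoo-nezha-d1.img /dev/sdX
```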

Now, you can either connect to the UART or plug in an Ethernet cable to get to a login prompt.

UART

You typically want to connect a USB-to-UART adapter to the board. Unlike other SBCs, the debug UART on the Nezha D1 is clearly labeled with GND, RX, and TX. Using the standard ThunderFly color scheme, this resolves to black for ground (GND), green for RX, and white for TX.

Then fire up your favorite serial terminal and power on the board.

Note: Your mileage may vary. For example, you probably want your user to be a member of the ‘dialout’ group to access the serial port. The device name of your USB-to-UART adapter may not be /dev/ttyUSB0.
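One possible way to do this is sketched below. The choice of picocom and the 115200 baud rate are assumptions, not details from this post, and open_uart is a made-up helper name; any serial terminal will do.

```shell
#!/bin/sh
# Sketch: open the debug UART with picocom after two sanity checks.
# Assumptions: picocom is installed and the console runs at 115200 baud.

open_uart() {
    # $1: serial device (defaults to /dev/ttyUSB0)
    dev=${1:-/dev/ttyUSB0}
    if [ ! -c "${dev}" ]; then
        echo "no serial device at ${dev}" >&2
        return 1
    fi
    if [ ! -w "${dev}" ]; then
        echo "no write access to ${dev}; is your user in the dialout group?" >&2
        return 1
    fi
    picocom -b 115200 "${dev}"
}

# open_uart /dev/ttyUSB0
```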

SSH

The Ethernet port of the board is configured to use DHCP for network configuration. An SSH daemon is listening on port 22.

Login

The image comes with a ‘root’ user whose password is set to ‘root’. Note that you should change this password as soon as possible.

gymage

The image was created using the gymage tool.

I envision gymage becoming an easy-to-use tool that allows users to create up-to-date Gentoo images for single-board computers. The tool is in an early stage with some open questions. However, you are free to try it. The source code of gymage is hosted at https://gitlab.com/flow/gymage, and feedback is, as always, appreciated.

Stay tuned for another blog post about gymage once it matures further.

Gentoo x86-64-v3 binary packages available

2024-02-04 09:00 GentooNews (https://www.gentoo.org/feeds/news.xml)

At the end of December 2023 we made our official announcement of binary Gentoo package hosting. The initial package set for amd64 was and is baseline x86-64, i.e., it should work on any 64bit Intel or AMD machine. Now, we are happy to announce that there is also a separate package set using the extended x86-64-v3 ISA (i.e., microarchitecture level) available for the same software. If your hardware supports it, use it and enjoy the speed-up! Read on for more details…

Questions & Answers

How can I check if my machine supports x86-64-v3?

The easiest way to do this is to use glibc’s dynamic linker:

larry@noumea ~ $ ld.so --help

Usage: ld.so [OPTION]... EXECUTABLE-FILE [ARGS-FOR-PROGRAM...]

You have invoked 'ld.so', the program interpreter for dynamically-linked

ELF programs. Usually, the program interpreter is invoked automatically

when a dynamically-linked executable is started.

[...]

[...]

Subdirectories of glibc-hwcaps directories, in priority order:

x86-64-v4

x86-64-v3 (supported, searched)

x86-64-v2 (supported, searched)

larry@noumea ~ $

As you can see, this laptop supports x86-64-v2 and x86-64-v3, but not x86-64-v4.
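The same check can be scripted. The sketch below looks for the "(supported" marker in the help text; the function name supports_level is made up, and it reads from standard input so it does not depend on where ld.so lives on a given system.

```shell
#!/bin/sh
# Sketch: report whether a given x86-64 level is marked "supported" in the
# "ld.so --help" output. The help text is read from standard input.

supports_level() {
    # $1: level name such as x86-64-v3
    grep -Eq "^[[:space:]]*$1 \(supported"
}

# Usage on a system where ld.so is in PATH, as in the session above:
# ld.so --help | supports_level x86-64-v3 && echo "x86-64-v3 is supported"
```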

How do I use the new x86-64-v3 packages?

On your amd64 machine, edit the configuration file in /etc/portage/binrepos.conf/ that defines the URI from where the packages are downloaded, and replace x86-64 with x86-64-v3. E.g., if you have so far

sync-uri = https://distfiles.gentoo.org/releases/amd64/binpackages/17.1/x86-64/

then you change the URI to

sync-uri = https://distfiles.gentoo.org/releases/amd64/binpackages/17.1/x86-64-v3/

That’s all.
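If you prefer to script the change, a sketch with GNU sed could look as follows. The helper name use_v3_packages is made up, and the file name in the usage comment is only an example; use whichever file in /etc/portage/binrepos.conf/ defines your sync-uri.

```shell
#!/bin/sh
# Sketch: point a binrepos.conf file at the x86-64-v3 package set.
# Assumes GNU sed (-i) and a sync-uri line ending in /x86-64/.

use_v3_packages() {
    # $1: configuration file to edit in place
    sed -i -e '/^sync-uri/ s|/x86-64/*$|/x86-64-v3/|' "$1"
}

# use_v3_packages /etc/portage/binrepos.conf/gentoobinhost.conf
```

Running it a second time leaves the file unchanged, because the pattern only matches a URI that still ends in x86-64.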

Why don’t you have x86-64-v4 packages?

There’s not yet enough hardware and people out there that could use them.

We could start building such packages at any time (our build host is new and shiny), but for now we recommend you build from source and use your own CFLAGS then. After all, if your machine supports x86-64-v4, it’s definitely fast…

Why is there recently so much noise about x86-64-v3 support in Linux distros?

Beats us. The ISA is 9 years old (just the tag x86-64-v3 was slapped onto it recently), so you’d think binaries would have been generated by now. With Gentoo you could’ve done (and probably have done) it all the time.

That said, in some processor lines (e.g., Atom), support for this instruction set was introduced rather late (2021).

Gentoo goes Binary!

2023-12-29 09:00 GentooNews (https://www.gentoo.org/feeds/news.xml)

You probably all know Gentoo Linux as your favourite source-based distribution. Did you know that our package manager, Portage, has also supported binary packages for years, and that source- and binary-based package installations can be freely mixed?

To speed up working with slow hardware and for overall convenience, we’re now also offering binary packages for download and direct installation! For most architectures, this is limited to the core system and weekly updates - not so for amd64 and arm64 however. There we’ve got a stunning >20 GByte of packages on our mirrors, from LibreOffice to KDE Plasma and from Gnome to Docker. Gentoo stable, updated daily. Enjoy! And read on for more details!

Questions & Answers

How can I set up my existing Gentoo installation to use these packages?

Quick setup instructions for the most common cases can be found in our wiki. In short, you need to create a configuration file in /etc/portage/binrepos.conf/.

In addition, we have a rather neat binary package guide on our Wiki that goes into much more detail.
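As a rough idea of what such a file contains, here is a sketch that writes one. The section name and the priority value are illustrative assumptions; the URI is the amd64 one quoted elsewhere on this page. The demo writes into a temporary directory rather than /etc/portage/binrepos.conf/.

```shell
#!/bin/sh
# Sketch: create a binary package host definition.
# Section name and priority are assumptions made for this example.

write_binrepo() {
    # $1: target file, $2: sync-uri of the package directory
    cat > "$1" <<EOF
[gentoobinhost]
priority = 1
sync-uri = $2
EOF
}

confdir=$(mktemp -d)
write_binrepo "${confdir}/gentoobinhost.conf" \
    "https://distfiles.gentoo.org/releases/amd64/binpackages/17.1/x86-64/"
cat "${confdir}/gentoobinhost.conf"
```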

What do I have to do with a new stage / new installation?

New stages already contain a suitable /etc/portage/binrepos.conf/gentoobinhost.conf. You are good to go from the start, although you may want to replace the sync-uri setting in there with a URI pointing to the corresponding directory on a local mirror.

$ emerge -uDNavg @world

What compile settings, use flags, … do the ‘‘normal’’ amd64 packages use?

The binary packages under amd64/binpackages/17.1/x86-64 are compiled using CFLAGS="-march=x86-64 -mtune=generic -O2 -pipe" and will work with any amd64 / x86-64 machine.

The available USE flag settings and versions correspond to the stable packages of the amd64/17.1/nomultilib (i.e., openrc), amd64/17.1/desktop/plasma/systemd, and amd64/17.1/desktop/gnome/systemd profiles. This should provide fairly large coverage.

What compile settings, use flags, … do the ‘‘normal’’ arm64 packages use?

The binary packages under arm64/binpackages/17.0/arm64 are compiled using CFLAGS="-O2 -pipe" and will work with any arm64 / AArch64 machine.

The available USE flag settings and versions correspond to the stable packages of the arm64/17.0 (i.e., openrc), arm64/17.0/desktop/plasma/systemd, and arm64/17.0/desktop/gnome/systemd profiles.

But hey, that’s not optimized for my CPU!

Tough luck. You can still compile packages yourself just as before!

What settings do the packages for other architectures and ABIs use?

The binary package hosting is wired up with the stage builds. Which means, for about every stage there is a binary package hosting which covers (only) the stage contents and settings. There are no further plans to expand coverage for now. But hey, this includes the compiler (gcc or clang) and the whole build toolchain!

Are the packages cryptographically signed?

Yes, with the same key as the stages.

Are the cryptographic signatures verified before installation?

Yes, with one limitation (in the default setting).

Portage knows two binary package formats, XPAK (old) and GPKG (new). Only GPKG supports cryptographic signing. Until recently, XPAK was the default setting (and it may still be the default on your installation since this is not changed during upgrade, but only at new installation).

The new, official Gentoo binary packages are all in GPKG format. GPKG packages have their signature verified, and if this fails, installation is refused. To avoid breaking compatibility with old binary packages, by default XPAK packages (which do not have signatures) can still be installed however.

If you want to require verified signatures (which is something we strongly recommend), set FEATURES="binpkg-request-signature" in make.conf. Then, obviously, you can also only use GPKG packages.
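A small sketch of making that change from a script follows. The helper name require_signatures is made up; the line it appends references ${FEATURES} so that a value set earlier in the same file is kept.

```shell
#!/bin/sh
# Sketch: enable binpkg-request-signature in a make.conf file, once.
# The appended line expands ${FEATURES} so an earlier value is preserved.

require_signatures() {
    # $1: path to make.conf (normally /etc/portage/make.conf)
    if ! grep -q 'binpkg-request-signature' "$1" 2>/dev/null; then
        echo 'FEATURES="${FEATURES} binpkg-request-signature"' >> "$1"
    fi
}

# require_signatures /etc/portage/make.conf
```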

I get an error that signatures cannot be verified.

Try running the Gentoo Trust Tool getuto as root.

$ getuto

This should set up the required key ring with the Gentoo Release Engineering keys for Portage.

If you have FEATURES="binpkg-request-signature" enabled in make.conf, then getuto is called automatically before every binary package download operation, to make sure that key updates and revocations are imported.

I’ve made binary packages myself and portage refuses to use them now!

Well, you found the side effect of FEATURES="binpkg-request-signature".

For your self-made packages you will need to set up a signing key and have that key trusted by the anchor in /etc/portage/gnupg.

The binary package guide on our Wiki will be helpful here.

My download is slow.

Then pretty please use a local mirror instead of downloading from University of Oregon. You can just edit the URI in your /etc/portage/binrepos.conf. And yes, that’s safe, because of the cryptographic signature.

My Portage still wants to compile from source.

If you use useflag combinations deviating from the profile default, then you can’t and won’t use the packages. Portage will happily mix and match though and combine binary packages with locally compiled ones. Gentoo still remains a source-based distribution, and we are not aiming for a full binary-only installation without any compilation at all.

Can I use the packages on a merged-usr system?

Yes. (If anything breaks, then this is a bug and should be reported.)

Can I use the packages with other (older or newer) profile versions?

No. That’s why the sync-uri path contains, e.g., “17.1”.

When there’s a new profile version, we’ll also provide new, separate package directories.

Any plans to offer binary packages of ~amd64 ?

Not yet. This would mean a ton of rebuilds… If we offer it one day, it’ll be at a separate URI for technical reasons.

The advice for now is to stick to stable as much as possible, and locally add in package.accept_keywords whatever packages from testing you want to use. This means you can still use a large amount of binary packages, and just compile the rest yourself.
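For illustration, accepting the testing keyword for a single package could be scripted like this. The package atom and the file name in the usage comment are hypothetical, as is the helper name accept_testing.

```shell
#!/bin/sh
# Sketch: accept the testing (~amd64) keyword for one package only.
# The atom and file name in the usage comment are hypothetical.

accept_testing() {
    # $1: package atom, $2: package.accept_keywords file to append to
    printf '%s ~amd64\n' "$1" >> "$2"
}

# accept_testing app-misc/example /etc/portage/package.accept_keywords/testing
```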

I have found a problem, with portage or a specific package!

Then please ask for advice (on IRC, the forums, or a mailing list) and/or file a bug!

Binary package support has been tested for some time, but with many more people using it edge cases will certainly occur, and quality bug reports are always appreciated!

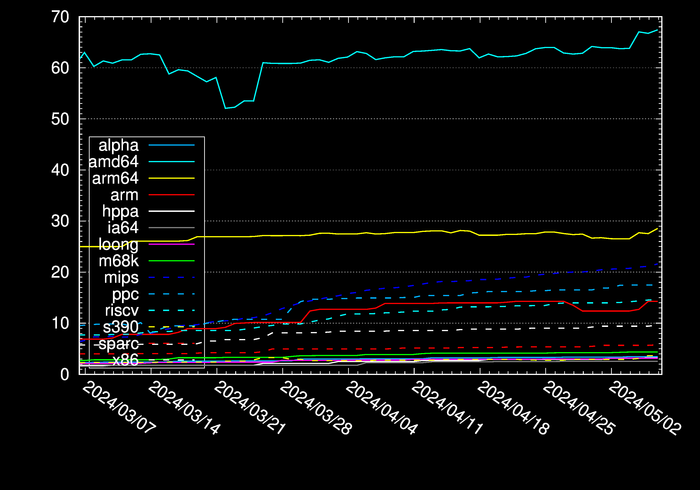

Any pretty pictures?

Of course! Here’s the amount of binary package data in GByte for each architecture…

.NET in Gentoo in 2023

2023-12-18 01:59 xgqt (xgqt)

.NET ecosystem in Gentoo in year 2023

The Gentoo Dotnet project introduced better support for building .NET-based software using the nuget, dotnet-pkg-base and dotnet-pkg eclasses. This opened new opportunities for bringing packages that depend on the .NET ecosystem to the official Gentoo ebuild repository and for helping developers that use dotnet-sdk on Gentoo.

New software requiring .NET is constantly being added to the main Gentoo tree, including:

- PowerShell for Linux,

- Denaro — finance application,

- Ryujinx — NS emulator,

- OpenRA — RTS engine for Command & Conquer, Red Alert and Dune2k,

- Pinta — graphics program,

- Pablodraw — Ansi, Ascii and RIPscrip art editor,

- Dafny — verification-aware programming language

- many packages aimed straight at developing .NET projects.

The Dotnet project is also looking for new maintainers and users who are willing to help out here and there. The current state of .NET in Gentoo is very good, but we can still do a lot better.

Special thanks to people who helped out

Links to bugs and announcements

- Bugs

- Github PRs

- Active Gentoo .NET projects

Portage Continuous Delivery

2023-12-17 03:50 xgqt (xgqt)

Portage as a CD system

This is a very simple way to use any system with Portage installed as a Continuous Delivery server.

I think for a testing environment this is a valid solution to consider.

Create a repository of software used in your organization

These articles from the Gentoo Wiki describe how to create a custom ebuild repository (overlay) pretty well:

Set up your repo with eselect-repository

Install the my-org repository:

eselect repository add my-org git https://git.my-org.local/portage/my-org.git

Sync my-org:

emerge --sync my-org

Install live packages of your software

First, enable live packages (keywordless) for your my-org repo:

echo '*/*::my-org **' >> /etc/portage/package.accept_keywords/0000_repo_my-org.conf

Install some packages from my-org:

emerge -av "=mycategory/mysoftware-9999"

Install smart-live-rebuild

smart-live-rebuild can automatically update live software packages that use git as their source URL.

Set up cron to run smart-live-rebuild

Refresh your my-org repository every hour:

0 */1 * * * emerge --sync my-org

Refresh the main Gentoo tree every 6 hours:

0 */6 * * * emerge --sync gentoo

Run smart-live-rebuild every 3 hours:

0 */3 * * * smart-live-rebuild

Restarting services after update

All-in-one script

You can either restart all services after a successful update:

File: /opt/update.sh

#!/bin/sh

set -e

smart-live-rebuild

systemctl restart my-service-1.service

systemctl restart my-service-2.service

Crontab:

0 */3 * * * /opt/update.sh

Via ebuilds pkg_ functions

File: my-service-1.ebuild

pkg_postinst() {

    systemctl restart my-service-1.service

}

More about pkg_postinst:

Example Gentoo overlays

A format that does one thing well or one-size-fits-all?

2023-12-07 18:58 mgorny (mgorny)

The Unix philosophy states that we ought to design programs that “do one thing well”. Nevertheless, the current trend is to design huge monoliths with multiple unrelated functions, with web browsers at the peak of that horrifying journey. However, let’s consider something else.

Does the same philosophy hold for algorithms and file formats? Is it better to design formats that suit a single use case well, and swap between different formats as need arises? Or perhaps it is a better solution to actually design them so they could fit different needs?

Let’s consider this by exploring three areas: hash algorithms, compressed file formats and image formats.

Hash algorithms

Hash, digest, checksum — they have many names, and many uses. To list a few uses of hash functions and their derivatives:

- verifying file integrity

- verifying file authenticity

- generating derived keys

- generating unique keys for fast data access and comparison

Different use cases imply different requirements. The simple CRC algorithms were good enough to check files for random damage but they aren’t suitable for cryptographic purposes. The SHA hashes provide good resistance to attacks but they are too slow to speed up data lookups. That role is much better served by dedicated fast hashes such as xxHash. In my opinion, these are all examples of “do one thing well”.

On the other hand, there is some overlap. More often than not, cryptographic hash functions are used to verify integrity. Then we have modern hashes like BLAKE2 that are both fast and secure (though not as fast as dedicated fast hashes). The Argon2 key derivation function builds upon BLAKE2 to improve its security even further, rather than inventing a new hash. These are examples of how a single tool is used to serve different purposes.
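To make the comparison tangible, here is a small sketch that computes three digests of the same input with GNU coreutils: a CRC via cksum, SHA-256 via sha256sum and BLAKE2b via b2sum. The helper name digests and the sample text are made up for the example.

```shell
#!/bin/sh
# Sketch: three digests of the same input, using GNU coreutils tools.
# cksum prints a CRC, sha256sum a SHA-256 digest, b2sum a BLAKE2b digest.

digests() {
    # $1: file to hash
    printf 'crc:     %s\n' "$(cksum < "$1" | cut -d' ' -f1)"
    printf 'sha256:  %s\n' "$(sha256sum < "$1" | cut -d' ' -f1)"
    printf 'blake2b: %s\n' "$(b2sum < "$1" | cut -d' ' -f1)"
}

sample=$(mktemp)
printf 'Planet Gentoo\n' > "${sample}"
digests "${sample}"
```

The output lengths already hint at the different goals: the CRC is a short number meant to catch random damage, while the two cryptographic digests are far longer.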

Compressed file formats

The purpose of compression, of course, is to reduce file size. However, individual algorithms may be optimized for different kinds of data and different goals.

Probably the oldest category are “archiving” algorithms that focus on providing strong compression and reasonably fast decompression. Back in the day, they were used to compress files in “cold storage” and for transfer; nowadays, they can be used basically for anything that you don’t modify very frequently. The common algorithms from this category include deflate (used by gzip, zip) and LZMA (used by 7z, lzip, xz).

Then, we have very strong algorithms that achieve remarkable compression at the cost of very slow compression and decompression. These are sometimes (but rarely) used for data distribution. An example of such algorithms is the PAQ family.

Then, we have very fast algorithms such as LZ4. They provide worse compression ratios than other algorithms, but they are so fast that they can be used to compress data on the fly. They can be used to speed up data access and transmission by reducing its size with no noticeable overhead.

Of course, many algorithms have different presets. You can run lz4 -9 to get stronger compression with LZ4, or xz -1 to get faster compression with XZ. However, neither the former will excel at compression ratio, nor the latter at speed.

Again, we are seeing different algorithms that “do one thing well”. However, nowadays ZSTD is gaining popularity and it spans a wider spectrum, being capable of both providing very fast compression (but not as fast as LZ4) and quite strong compression. What’s really important is that it’s capable of providing adaptive compression — that is, dynamically adjusting the compression level to provide the best throughput. It switches to a faster preset if the current one is slowing the transmission down, and to a stronger one if there is a potential speedup in that.
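A quick way to get a feel for these trade-offs is to compress one file with several tools and compare the sizes. The sketch below does that with each tool's default preset and skips tools that are not installed; the helper name compare_sizes and the generated sample data are assumptions made for the example.

```shell
#!/bin/sh
# Sketch: compare compressed sizes of one file across several tools.
# Tools that are not installed are skipped; each runs at its default preset.

compare_sizes() {
    # $1: file to compress
    printf '%-6s %s\n' raw "$(wc -c < "$1")"
    for tool in lz4 gzip zstd xz; do
        if command -v "${tool}" > /dev/null 2>&1; then
            printf '%-6s %s\n' "${tool}" "$("${tool}" -c "$1" 2>/dev/null | wc -c)"
        fi
    done
}

sample=$(mktemp)
seq 1 20000 > "${sample}"
compare_sizes "${sample}"
```

Wrapping individual invocations in the shell's time keyword adds the speed dimension, and presets such as lz4 -9, xz -1 or zstd's --adapt option can be substituted to explore the ranges described above.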

Image formats

Let’s discuss image formats now. If we look back far enough, we’d arrive at a time when two image formats were dominating the web. On one hand, we had GIF — with lossless compression, limited color palette, transparency and animations, that made it a good choice for computer-generated images. On the other, we had JPEG — with efficient lossy compression and high color depth suitable for photography. We could see these two as “doing one thing well”.

Then came PNG. PNG is also lossless but provides much higher color depth and improved support for transparency via an alpha channel. While it’s still the format of choice for computer-generated images, it’s also somewhat suitable for photography (but with less efficient compression). With APNG around, it effectively replaces GIF but it also partially overlaps with the use cases for JPEG.

Modern image formats go even further. WebP, AVIF and JPEG XL all support both lossless and lossy compression, high color depths, alpha channel, animation. Therefore, they are suitable both for computer-generated images and for photography. Effectively, they can replace all their predecessors with a “one size fits all” format.

Conclusion

I’ve asked whether it is better to design formats that focus on one specific use case, or whether formats that try to cover a whole spectrum of use cases are better. I’m afraid there’s no easy answer to this question.

We can clearly see that “one-size-fits-all” solutions are gaining popularity — BLAKE2 among hashes, ZSTD in compressed file formats, WebP, AVIF and JPEG XL among image formats. They have a single clear advantage — you need just one tool, one implementation.

Your web browser needs to support only one format that covers both computer-generated graphics using lossless compression and photographs using lossy compression. Different tools can reuse the same BLAKE2 implementation that’s well tested and audited. A single ZSTD library can serve different applications in their distinct use cases.

However, there is still a clear edge to algorithms that are focused on a single use case. xxHash is still faster than any hashes that could be remotely suitable for cryptographic purposes. LZ4 is still faster than ZSTD can be in its lowest compression mode.

The only reasonable conclusion seems to be: there are use cases for both. There are use cases that are best satisfied by a dedicated algorithm, and there are use cases when a more generic solution is better. There are use cases when integrating two different hash algorithms, two different compression libraries into your program, with the overhead involved, is a better choice than using just one algorithm that fits neither of your two distinct use cases well.

Once again, it feels that a reference to XKCD#927 is appropriate. However, in this particular instance this isn’t a bad thing.

My thin wrapper for emerge(1)

2023-09-05 20:04 mgorny (mgorny)

I’ve recently written a thin wrapper over emerge that I use in my development environment. It does the following:

- set tmux pane title to the first package argument (so you can roughly see what’s emerging on every pane)

- beep meaningfully when emerge finishes (two beeps for success, three for failure),

- run pip check after successful run to check for mismatched Python dependencies.

Here’s the code:

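#!/bin/sh

# Name the tmux window after the first non-option argument.
for arg; do
    case ${arg} in
        -*)
            ;;
        *)
            tmux rename-window "${arg}"
            break
            ;;
    esac
done

/usr/bin/emerge "${@}"
ret=${?}

if [ "${ret}" -eq 0 ]; then
    # Success: check for mismatched Python dependencies.
    python3.11 -m pip check | grep -v certifi
else
    # Failure: one extra beep, for three in total.
    tput bel
    sleep 0.1
fi
# Two beeps on every run.
tput bel
sleep 0.1
tput bel

exit "${ret}"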
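
The argument scan at the top of the wrapper can also be pulled out into a small function, which makes it easier to try on its own. This is only a sketch: the function name first_package_arg is made up for illustration and is not part of the original wrapper.

```shell
#!/bin/sh
# Hypothetical helper: print the first argument that does not start with "-".
first_package_arg() {
    for arg; do
        case ${arg} in
            -*) ;;                      # skip options such as -av or --ask
            *)  printf '%s\n' "${arg}"  # first non-option argument
                return 0 ;;
        esac
    done
    return 1                            # only options were given
}
```

With it, the loop would shrink to something like tmux rename-window "$(first_package_arg "$@")". Like the original loop, it would mistake the value of an option that takes a separate argument for a package name.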

Genpatches Supported Kernel Versions

2023-08-31 20:15 mpagano (mpagano)

As part of an effort to streamline developer capacity, the maintainers of gentoo-sources and genpatches have decided to limit support for past kernel versions to a maximum of 3 years after their initial release.

Notes:

- This impacts all kernels that utilize the official genpatches releases as part of their kernel packages, including but not limited to the list here [1].

- sys-kernel/vanilla-sources will continue to follow the upstream release and deprecation schedule. Note that the upstream release schedule is showing their LTS kernel support time frames going from six years to four [2]

- gentoo-kernel will also be following this 3 year kernel support and release policy

- In the past, we only supported two versions. With this change, gentoo-sources will still, if enacted today, support 6 versions.
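
As a rough illustration of the 3-year rule, the check below decides whether a kernel series is still inside the window. It is a sketch under stated assumptions: the helper name and the YYYY-MM-DD input format are made up, and the real decision is taken by the maintainers, not by a script.

```shell
#!/bin/sh
# Hypothetical helper, not part of genpatches.
# Usage: within_support_window RELEASE_DATE TODAY   (both as YYYY-MM-DD)
within_support_window() {
    # Turn YYYY-MM-DD into the integer YYYYMMDD so dates compare numerically.
    release=$(printf '%s' "$1" | tr -d '-')
    today=$(printf '%s' "$2" | tr -d '-')
    # Adding 30000 to YYYYMMDD moves the date forward by three years.
    cutoff=$((release + 30000))
    [ "${today}" -le "${cutoff}" ]
}
```

The function returns success while the given day is at most three years after the initial release date, and failure afterwards.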

Why should I not run older kernel versions?

- Upstream maintainer Greg Kroah-Hartman specifically recommends the following list of preferred kernel versions to choose from in order: [3]

- Supported kernel from your favorite Linux distribution

- Latest stable release

- Latest LTS release

- Older LTS release that is still being maintained

Greg specifically called out Gentoo’s method of consistently rolling out kernels with both security/bug fixes early and keeping up with upstream’s releases.

Gentoo still does and will continue to offer a variety of kernels to choose from with this change.

[1] https://dev.gentoo.org/~mpagano/genpatches/kernels.html

[2] https://kernel.org/category/releases.html

[3] http://www.kroah.com/log/blog/2018/08/24/what-stable-kernel-should-i-use/

Final Report, Automated Gentoo System Updater

2023-08-27 19:28 GSoC (gsoc)

Project Goals

The main goal of the project was to write an app that automatically handles updates on Gentoo Linux systems and sends notifications with update summaries. More specifically, I wanted to:

- Simplify the update process for beginners, offering a simpler one-click method.

- Minimize time experienced users spend on routine update tasks, decreasing their workload.

- Ensure systems remain secure and regularly updated with minimal manual intervention.

- Keep users informed of the updates and changes.

- Improve the overall Gentoo Linux user experience.

Progress

Here is a summary of what was done every week with links to my blog posts.

Week 1

Basic system updater is ready. Also prepared a Docker Compose file to run tests in containers. Available functionality:

- update security patches

- update @world

- merge changed configuration files

- restart updated services

- do a post-update clean up

- read elogs

- read news

Week 2

Packaged Python code, created an ebuild and a GitHub Actions workflow that publishes package to PyPI when commit is tagged.

Week 3

Fixed issue #7, responded to issue #8, and fixed bug 908308. Added USE flags to manage dependencies. Improved Bash code stability.

Week 4

Fixed errors in ebuild, replaced USE flags with optfeature for dependency management. Wrote a blog post to introduce my app and posted it on forums. Fixed a bug in --args flag.

Week 5

Received some feedback from forums. Coded much of the parser (--report). Improved container testing environment.

Links:

- Improved dockerfiles

Weeks 6 and 7

Completed parser (--report). Also added disk usage calculation before and after the update. Available functionality:

- If the update was successful, report will show:

- updated package names

- package versions in the format “old -> new”

- USE flags of those packages

- disk usage before and after the update

- If the emerge pretend has failed, report will show:

- error type (for now only supports ‘blocked packages’ error)

- error details (for blocked package it will show problematic packages)
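
To give an idea of what extracting the problematic packages can look like, here is a shell sketch that pulls blocking atoms out of emerge pretend output. It is not the parser used by gentoo_update; it assumes hard blockers are printed on lines of the form "[blocks B      ] <atom> (...)", and the function name is made up.

```shell
#!/bin/sh
# Sketch: list blocking atoms from `emerge --pretend` output read on stdin.
# Assumes hard blockers are printed as "[blocks B      ] <atom> (...)".
list_blockers() {
    sed -n 's/^\[blocks B *] *\([^ ]*\).*/\1/p'
}
```

It could be fed with something like emerge --pretend --update @world 2>&1 | list_blockers.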

Week 8

Added 2 notification methods (--send-reports): an IRC bot and emails via Sendgrid.

Week 9-10

Improved CLI argument handling. Experimented with different mobile app UI layouts and backend options. Fixed issue #17. Started working on mobile app UI, decided to use Firebase for backend.

Week 11-12

Completed mobile app (UI + backend). Created a plan to migrate to a custom self-hosted backend based on Django+MongoDB+Nginx in the future. Added --send-reports mobile option to CLI. Available functionality:

- UI

- Login screen: Anonymous login

- Reports screen: Receive and view reports sent from the CLI app.

- Profile screen: View token, user ID and Sign Out button.

- Backend

- Create anonymous users (Cloud Functions)

- Create user tokens (Cloud Functions)

- Receive tokens in HTTPS requests, verify them, and route to users (Cloud Functions)

- Send push notifications (FCM)

- Secure database access with Firestore security rules

Link:

- Pull requests: #18

- Mobile app repository

Final week

Added token encryption with Cloud Functions. Packaged the mobile app with GitHub Actions and published it to the Google Play Store. Recorded a demo video and wrote the gentoo_update User Guide, which covers both the CLI and the mobile app.

Links:

- Demo video

- gentoo_update User Guide

- Packaging GitHub Actions workflow

- Google Play link

- Release page

Project Status

I would say I’m very satisfied with the current state of the project. Almost all tasks from the proposal were completed, and there is a product that can already be used. To summarize, here is a list of deliverables:

- Source code for gentoo_update CLI app

- gentoo_update CLI app ebuild in GURU repository

- gentoo_update CLI app package on PyPI

- Source code for mobile app

- Mobile app for Android as an APK

- Mobile app for Android in Google Play

Future Improvements

I plan to add a lot more features to both the CLI and the mobile app. Full feature lists can be found in the readmes of both repositories.

Final Thoughts

These 12 weeks felt like a hackathon, where I had to learn new technologies very quickly and create something that works very fast. I faced many challenges and acquired a range of new skills.

Over the course of this project, I coded both Linux CLI applications using Python and Bash, and mobile apps with Flutter and Firebase. To maintain the quality of my work, I tested the code in Docker containers, virtual machines and physical hardware. Additionally, I built and deployed CI/CD pipelines with GitHub Actions to automate packaging. Beyond the technical side, I engaged actively with Gentoo community, utilizing IRC chats and forums. Through these platforms, I addressed and resolved issues on both GitHub and Gentoo Bugs, enriching my understanding and refining my skills.

I also would like to thank my mentor, Andrey Falko, for all his help and support. I wouldn’t have been able to finish this project without his guidance.

In addition, I want to thank Google for providing such a generous opportunity for open source developers to work on bringing forth innovation.

Lastly, I am grateful to the Gentoo community for the feedback that has helped me improve the project immensely.

gentoo_update User Guide

2023-08-27 18:15 GSoC (gsoc)

Introduction

This article will go through the basic usage of the gentoo_update CLI tool and the mobile app.

gentoo_update CLI App

Installation

gentoo_update is available in the GURU overlay and on PyPI. Generally, installing the program from the GURU overlay is the preferred method, but PyPI will always have the most recent version.

Enable GURU and install with emerge:

eselect repository enable guru

emerge --ask app-admin/gentoo_update

Alternatively, install from PyPI with pip:

python -m venv .venv_gentoo_update

source .venv_gentoo_update/bin/activate

python -m pip install gentoo_update

Update

gentoo_update provides 2 update modes – full and security. Full mode updates @world, and security mode uses glsa-check to find security patches, and installs them if something is found.

By default, when run without flags, security mode is selected:

gentoo-update

To update @world, run:

gentoo-update --update-mode full

Full list of available parameters and flags can be accessed with the --help flag. Further examples are detailed in the repository’s readme file.
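
To make the difference between the two modes concrete, the sketch below prints the kind of Portage commands each mode roughly corresponds to. It is illustrative only: the function name is made up, and the commands gentoo_update really runs may differ from the ones shown.

```shell
#!/bin/sh
# Illustrative only: the commands gentoo_update really runs may differ.
# Usage: plan_update [security|full]
plan_update() {
    case ${1:-security} in
        security)
            # List, then fix, the GLSAs that affect this system.
            echo "glsa-check --list affected"
            echo "glsa-check --fix affected"
            ;;
        full)
            echo "emerge --update --deep --newuse @world"
            ;;
        *)
            echo "unknown update mode: $1" >&2
            return 1
            ;;
    esac
}
```

Calling plan_update without an argument prints the security plan, mirroring the default described above.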

Once the update concludes, a log file gets generated at /var/log/portage/gentoo_update/log_<date> (or whatever $PORTAGE_LOGDIR is set to). This log becomes the basis for the update report when the --report flag is used, transforming the log details into a structured update report.
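
The log location described above can be worked out in the shell as follows. This is a minimal sketch with a made-up function name; it only looks at the PORTAGE_LOGDIR environment variable and falls back to /var/log/portage.

```shell
#!/bin/sh
# Hypothetical helper: print the directory that holds gentoo_update logs.
gentoo_update_log_dir() {
    # Fall back to /var/log/portage when PORTAGE_LOGDIR is unset or empty.
    printf '%s\n' "${PORTAGE_LOGDIR:-/var/log/portage}/gentoo_update"
}
```

If PORTAGE_LOGDIR is only set in make.conf, it will not be visible in the shell environment; in that case its value can be queried with portageq envvar PORTAGE_LOGDIR.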

Send Report

The update report can be sent through three distinct methods: IRC bot, email, or mobile app.

IRC Bot Method

Begin by registering a user on an IRC server and setting a nickname as outlined in the documentation. After establishing a chat channel for notifications, define the necessary environment variables and run the following commands:

export IRC_CHANNEL="#<irc_channel_name>"

export IRC_BOT_NICKNAME="<bot_name>"

export IRC_BOT_PASSWORD="<bot_password>"

gentoo-update --send-report irc

Email via Sendgrid

To use Sendgrid, register for an account and generate an API key. After installing the Sendgrid Python library from GURU, save the API key in an environment variable and use the commands below:

emerge --ask dev-python/sendgrid

export SENDGRID_TO='recipient@email.com'

export SENDGRID_FROM='sender@email.com'

export SENDGRID_API_KEY='SG.****************'

gentoo-update --send-report email
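
Both notification methods depend on several environment variables, so a small pre-flight check can catch a missing one before the update starts. The helper below is a sketch and is not shipped with gentoo_update; its name is made up.

```shell
#!/bin/sh
# Hypothetical pre-flight check; gentoo_update does not ship this helper.
# Usage: require_env NAME...   (returns non-zero if any variable is empty)
require_env() {
    missing=0
    for name; do
        # Indirect lookup of the variable called ${name} in POSIX sh.
        eval "value=\${${name}:-}"
        if [ -z "${value}" ]; then
            echo "missing environment variable: ${name}" >&2
            missing=1
        fi
    done
    return "${missing}"
}
```

For example: require_env SENDGRID_TO SENDGRID_FROM SENDGRID_API_KEY && gentoo-update --send-report email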

Notifications can also be sent via the mobile app. Details on this method will be elaborated in the following section.

gentoo_update Mobile App

Installation

The mobile app can be installed either from GitHub or from the Google Play Store.

Play Store

The app can be found by searching ‘gentoo_update’ in the Play Store, or by using this link.

Manual Installation

For manual installation on an Android device, download the APK file from the Releases tab on GitHub. Ensure you’ve enabled installation from Unknown Sources before proceeding.

Usage

The mobile app consists of three screens: Login, Reports, and Profile.

Upon first use, users will see the Login screen. To proceed, select the Anonymous Login button. This action generates an account with a unique user ID and token, essential for the CLI to send reports.

The Reports screen displays all reports sent using a specific token. Each entry shows the update status and report ID. For an in-depth view of any report, simply tap on it.

On the Profile screen, users can find their 8-character token, which needs to be saved as the GU_TOKEN variable on the Gentoo instance. This screen also shows the AES key status, crucial for decrypting the client-side token as it’s encrypted in the database. To log out, tap the Sign Out button.

Note: Since only Anonymous Login is available, once logged out, returning to the same account isn’t possible.

Contacts

The preferred way to get help or request a new feature for both the CLI and mobile apps is to create an issue on GitHub:

- gentoo_update CLI issues page

- Mobile app issues page

Or just contact me directly via labbrat_social@pm.me and IRC. I am in most of the #gentoo IRC groups and my nick is #LabBrat.

Links

- [Link] – gentoo_update CLI repository

- [Link] – Mobile App repository

Week 11 report on porting Gentoo packages to modern C

2023-08-14 08:30 GSoC (gsoc)

Hello all, hope you’re doing well. This is my week 11 report for my project “Porting Gentoo’s packages to modern C”.

Similar to the last two weeks, I took up bugs from the tracker at random and patched them, sending patches upstream whenever possible. Unfortunately, there is nothing new or interesting to report.

I have some open PRs at ::gentoo that I would like to work on and get reviews on from my mentor(s).

This coming week is going to be the last week, so I would like to fix a few more bugs and start wrapping things up. However, I don’t plan on abandoning my patching work this week (or even after GSoC), as there are still lots of interesting packages in the tracker.

Till then see yah!

Week 9+10 Report, Automated Gentoo System Updater

2023-08-07 21:24 GSoC (gsoc)

This article is a summary of all the changes made on Automated Gentoo System Updater project during weeks 9 and 10 of GSoC.

Project is hosted on GitHub (gentoo_update and mobile app).

Progress on Weeks 9 and 10

I have finalized the app architecture. Here are the details:

The app’s main functionality is to receive notification from the push server. For each user, it will create a unique API token after authentication (there is an Anonymous option). This token will be used by gentoo_update to send the encrypted report to the mobile device using a push server endpoint. Update reports will be kept only on the mobile device, ensuring privacy.

After much discussion, I decided to implement the app’s backend in Firebase. Since GSoC is organized by Google, it seems appropriate to use their products for this project. However, future plans include the possibility of implementing a self-hosted web server, so that instead of authenticating, the user will just enter the server’s public IP and port.

Example usage will be something like:

- Download the app and sign-in.

- App will generate a token, 1 token per 1 account.

- Save the token into an environmental variable on Gentoo Linux.

- Run gentoo_update --send-report mobile

- Wait until notification arrives on the mobile app.

I have also made some progress on the app’s code. I’ve decided to host it in another repository because it doesn’t require direct access to gentoo_update, and this way it will be easier to manage versions and set up CI/CD.

Splitting tasks for the app into UI and Backend categories was not very efficient in practice, since the two are very closely related. Here is what I have done so far:

- Create an app layout

- Set up Firebase backend for the app

- Set up database structure for storing tokens

- Configure anonymous authentication

- UI elements for everything above

Challenges

I’m finding it somewhat challenging to get used to Flutter and design a modern-looking app. My comfort zone lies more in coding backend and automation tasks rather than focusing on the intricacies of UI components. Despite these challenges, I am 60% sure that in the end the app will look half-decent.

Plans for Week 11

After week 11 I plan to have a mechanism to deliver update reports from a Gentoo Linux machine.

Week 10 report on porting Gentoo packages to modern C

2023-08-06 21:53 GSoC (gsoc)

Hello all, I’m here with my week 10 report for my project “Porting Gentoo’s packages to modern C”.

Apart from the usual patching of packages from the tracker, the most significant work done this week was getting the GNOME desktop working on the llvm profile. Note, however, that the packages gui-libs/libhandy, dev-libs/libgee and sys-libs/libblockdev require a gcc fallback environment. net-dialup/ppp was also on our list, but thanks to Sam it has been patched [0] (and the fix sent upstream). I’m pretty sure that the same workaround would work on the musl-llvm profile as well. The overall point is that we now have two DEs on the llvm profile: GNOME and MATE.

Another thing to note is that gui-libs/gtk-4.10.4, a dependency of GNOME 44.3, currently requires overriding LD to bfd and OBJCOPY to GNU objcopy.
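
One common way to apply such per-package overrides is Portage's package.env mechanism. The sketch below writes the two files involved; the file name gnu-binutils.conf and the tool values ld.bfd and objcopy are assumptions for illustration (they may need a CHOST prefix on a real system), not settings taken from this report.

```shell
#!/bin/sh
# Sketch only: file names and tool values are assumptions, not from the post.
# Usage: write_gnu_binutils_env PORTAGE_CONFIG_DIR   (normally /etc/portage)
write_gnu_binutils_env() {
    confdir=$1
    mkdir -p "${confdir}/env" || return 1
    # Variables Portage applies only to packages that opt in below.
    cat > "${confdir}/env/gnu-binutils.conf" <<'EOF'
LD="ld.bfd"
OBJCOPY="objcopy"
EOF
    # Opt gui-libs/gtk in to the override.
    echo "gui-libs/gtk gnu-binutils.conf" >> "${confdir}/package.env"
}
```

The function assumes package.env is a regular file; if it is a directory on your system, the entry would go into a file inside it instead.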

Unfortunately, time is not my friend here and I’ve got only two weeks left. I’ll try to fix as many packages as possible in the coming weeks, starting with the GNOME dependencies.

Meanwhile, a lot of my upstream patches have been merged, and I hope the remaining ones get merged as well; [1][2] to name a few.

Till then, see ya!

[0]: https://github.com/gentoo/gentoo/pull/32198

[1]: https://github.com/CruiserOne/Astrolog/pull/20

[2]: https://github.com/cosmos72/detachtty/pull/6

Weekly report 9, LLVM-libc

2023-08-02 04:32 GSoC (gsoc)

Hi! This week I’ve pretty much finished the work on LLVM/Clang support for Crossdev and the LLVM-libc ebuild(s). I have sent PRs for Crossdev and the related ebuild changes here:

https://github.com/gentoo/crossdev/pull/10

https://github.com/gentoo/gentoo/pull/32136

This PR includes changes for compiler-rt which are always needed for Clang crossdev, regardless of libc. There are also changes to musl, kernel-2.eclass (for linux-headers), and a new eclass, cross.eclass.

I made a gentoo.git branch that has LLVM-libc, libc-hdrgen ebuilds and a gnuconfig patch to support LLVM-libc: https://github.com/gentoo/gentoo/compare/master…alfredfo:gentoo:gentoo-llvm-libc. I want to merge the Crossdev changes and ebuilds before merging this. Previously, all autotools-based projects would fail to configure on LLVM-libc because there was no gnuconfig entry for it.

I have also solved last week’s problem of not being able to compile SCUDO into LLVM-libc directly. This was caused by two things. 1) LLVM-libc only checked for compiler-rt in LLVM_ENABLE_PROJECTS, not LLVM_ENABLE_RUNTIMES, which is needed when using “llvm-project/runtimes” as the root source directory (“Runtimes build”).

Fix commit:

https://github.com/llvm/llvm-project/commit/fe9c3c786837de74dc936f8994cd5a53dd8ee708

2) Many compiler-rt configure tests would fail because LLVM-libc does not support dynamic linking, which in turn disabled the build of SCUDO. This was fixed by passing -DCMAKE_TRY_COMPILE_TARGET_TYPE=STATIC_LIBRARY. So now I no longer need to manually compile the source files and append object files into libc.a, yay!
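
For orientation, a runtimes build with these settings might be configured with arguments along the following lines. This is a sketch, not the ebuild's actual configuration: only LLVM_ENABLE_RUNTIMES and CMAKE_TRY_COMPILE_TARGET_TYPE come from the text above, while LLVM_LIBC_FULL_BUILD and LLVM_LIBC_INCLUDE_SCUDO are assumptions based on the upstream LLVM-libc build documentation, and the function name is made up.

```shell
#!/bin/sh
# Sketch: print CMake arguments for a "runtimes" build of LLVM-libc with SCUDO.
# Flags other than LLVM_ENABLE_RUNTIMES and CMAKE_TRY_COMPILE_TARGET_TYPE
# are assumptions based on upstream LLVM-libc build documentation.
scudo_libc_cmake_args() {
    cat <<'EOF'
-S llvm-project/runtimes
-DLLVM_ENABLE_RUNTIMES=libc;compiler-rt
-DLLVM_LIBC_FULL_BUILD=ON
-DLLVM_LIBC_INCLUDE_SCUDO=ON
-DCMAKE_TRY_COMPILE_TARGET_TYPE=STATIC_LIBRARY
EOF
}
```

The function only prints the arguments, one per line. When typing them on a command line, the value containing a semicolon has to be quoted.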

Now I will continue to fix packages for use with LLVM-libc Crossdev, or more likely, add the needed functionality to LLVM-libc. I will of course also address any comments I get on my PRs.

—

catcream

Week 9 report on porting Gentoo packages to modern C

2023-07-30 21:31 GSoC (gsoc)

Hello all, hope you’re doing well. This is my week 9 report for my project “Porting Gentoo’s packages to modern C”.

Similar to last week, I picked up bugs at random and started submitting patches. But this time I made sure to check upstream and send in patches whenever possible. If that turned out to be difficult, or I couldn’t find the upstream, I made sure to note it in the PR, either in the commit message or in a separate comment. This way it’ll help Sam keep track of things and of my progress.

Apart from that, there is nothing new or interesting, unfortunately.

For the coming week the plan is the same: pick up more bugs and send in PRs, both in ::gentoo and upstream whenever possible. I also have some free time in the coming week, so I plan to make up for the time lost during my sick days, as there are still lots of packages that require patching.

I would like to note here that I made an extra blog post last week about setting up a testing environment using lxc, and about using Gentoo’s stage-3 tarballs to create custom lxc Gentoo images. I don’t really expect anyone to follow it or use it; I mainly put it up as a future reference for myself.

Till then, see ya!

Genkernel in 2023

2023-07-29 20:10 xgqt (xgqt)

I really wanted to look into the new kernel building solutions for Gentoo and maybe migrate to dracut, but the last time I tried, ~1.5 years ago, the initramfs was not working for me.

And now in 2023 I’m still running genkernel for my personal boxes as well as other servers running Gentoo.

I guess some short term solutions really become defined tools :P

So this is how I rebuild my kernel nowadays:

- Copy old config

    cd /usr/src
    cp linux-6.1.38-gentoo/.config linux-6.1.41-gentoo/

- Remove old kernel build directories

    rm -r linux-6.1.31-gentoo

- Run initial preparation

    ( eselect kernel set 1 && cd /usr/src/linux && make olddefconfig )

- Call genkernel

    genkernel \
        --no-menuconfig \
        --no-clean \
        --no-clear-cachedir \
        --no-cleanup \
        --no-mrproper \
        --lvm \
        --luks \
        --mdadm \
        --nfs \
        --kernel-localversion="-$(hostname)-$(date '+%Y.%m.%d')" \
        all

- Rebuild the modules

  If in your /etc/genkernel.conf you have MODULEREBUILD turned off, then also call emerge:

    emerge -1 @module-rebuild

Weekly report 8, LLVM libc

2023-07-25 02:21 GSoC (gsoc)

Hi! This week (and last week) I’ve spent my time polishing the LLVM/Clang crossdev work. I have also created ebuilds for llvm-libc, libc-hdrgen and the SCUDO allocator, although I will probably bake SCUDO into the llvm-libc ebuild instead.

I have also made a cross eclass that handles cross compilation, instead of having the same logic copy-pasted into all ebuilds. To differentiate a “normal” crossdev package from an LLVM/Clang crossdev one, I decided to use “cross_llvm-${CTARGET}” as the package category name. This is necessary since you need some way to tell the ebuild to use LLVM for cross. My initial idea was to handle all this in the crossdev script, but crossdev ebuilds are self-contained, and you can do something like “emerge cross_llvm-gentoo-linux-llvm/llvm-libc” and it will do the right thing without running emerge from crossdev. Hence I need to handle cross compilation in the ebuilds themselves, using the eclass. Sam and I are not sure if a new eclass is the right thing to do, but I will continue with it until I get some more thoughts, as we can just inline everything later without wasting any work.
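
The category naming scheme described above can be illustrated with a small parser. This is not code from cross.eclass: the function name and the "gnu" and "llvm" labels are made up, and the only inputs assumed are the regular crossdev category "cross-${CTARGET}" and the new "cross_llvm-${CTARGET}".

```shell
#!/bin/sh
# Hypothetical helper mirroring the category naming described above.
# Prints "<toolchain> <CTARGET>" for a crossdev category.
cross_info_from_category() {
    case $1 in
        cross_llvm-*) echo "llvm ${1#cross_llvm-}" ;;  # LLVM/Clang crossdev
        cross-*)      echo "gnu ${1#cross-}" ;;        # regular crossdev
        *)            return 1 ;;                      # not a cross category
    esac
}
```

An ebuild-side check built on the same idea would only need to look at the category to decide which toolchain to use.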

I feel pretty much done now, except for baking SCUDO directly into the llvm-libc ebuild. It is actually very simple to do, but I got some issues with libstdc++ when using llvm/ as the root source directory for the libc build, which is necessary when compiling SCUDO. Previously I used runtimes/ as the root directory, and that worked without issue. Currently, to work around this, you can just compile the source files in llvm-project/compiler-rt/lib/scudo/standalone and append the object files into libc.a. LLVM libc then just works with crossdev and you can compile things with the emerge wrapper as usual, but currently a lot of autotools things break because I have not specified gnuconfig for llvm-libc yet.

I had a lot of trouble last week with sonames when doing an aarch64 musl crossdev setup and running binaries with qemu-user. However, it turned out it was just a warning, and it worked after passing LD_LIBRARY_PATH as an environment variable to qemu-user. I spent a loong time on this.
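
As a rough sketch of that setup, the function below assembles a qemu-user command line. The /usr/<CTARGET> sysroot layout and the usr/lib library directory are assumptions, and the function name is made up; -L and -E are regular qemu-user options.

```shell
#!/bin/sh
# Sketch: build a qemu-user command line for a crossdev sysroot.
# The /usr/<CTARGET> sysroot layout and the library directory are assumptions.
# Usage: qemu_user_cmd CTARGET BINARY
qemu_user_cmd() {
    ctarget=$1
    binary=$2
    arch=${ctarget%%-*}          # "aarch64" from "aarch64-unknown-linux-musl"
    sysroot="/usr/${ctarget}"
    # -L sets the prefix used to find the guest dynamic loader,
    # -E sets an environment variable for the guest program only.
    echo "qemu-${arch} -L ${sysroot} -E LD_LIBRARY_PATH=${sysroot}/usr/lib ${binary}"
}
```

The function only prints the command so that it can be inspected before being run.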

Currently I need to upstream changes to the compiler-rt ebuild, musl, the llvm-libc ebuild, libc-hdrgen, cross.eclass, and of course crossdev.

Next week I will send the changes upstream for review and continue work on LLVM libc, most likely with simple packages like ed, and then try to get the missing pieces upstreamed to LLVM libc. fileno() is definitely needed for ed.

Last week I did not write a blog post as I was in “bug hell”: I worked on a lot of small things at once and thought “if I just finish this I can write a good report”, and then Wednesday came, and I decided to just do an overview of all my work in this week’s blog post instead.

– —

catcream