Android Developers Blog RSS feed

Latest news

How to effectively A/B test power consumption for your Android app’s features

2024-04-17 19:18 Android Developers

Android developers have been telling us they're looking for tools to help optimize power consumption for different devices on Android.

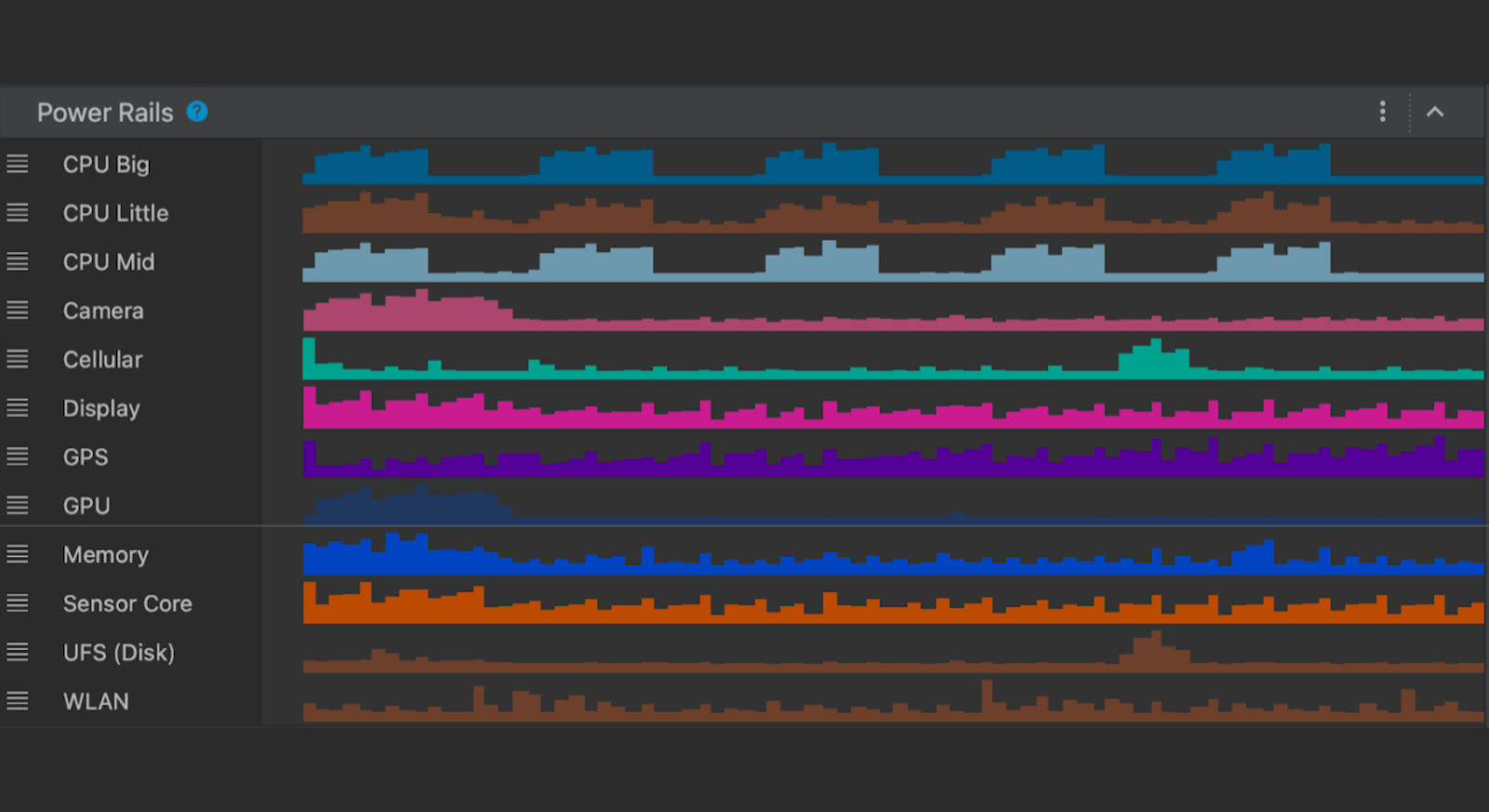

The new Power Profiler in Android Studio helps Android developers by showing power consumption happening on devices as the app is being used. Understanding power consumption across Android devices can help Android developers identify and fix power consumption issues in their apps. They can run A/B tests to compare the power consumption of different algorithms, features or even different versions of their app.

Apps which are optimized for lower power consumption lead to an improved battery and thermal performance of the device, which means an improved user experience on Android.

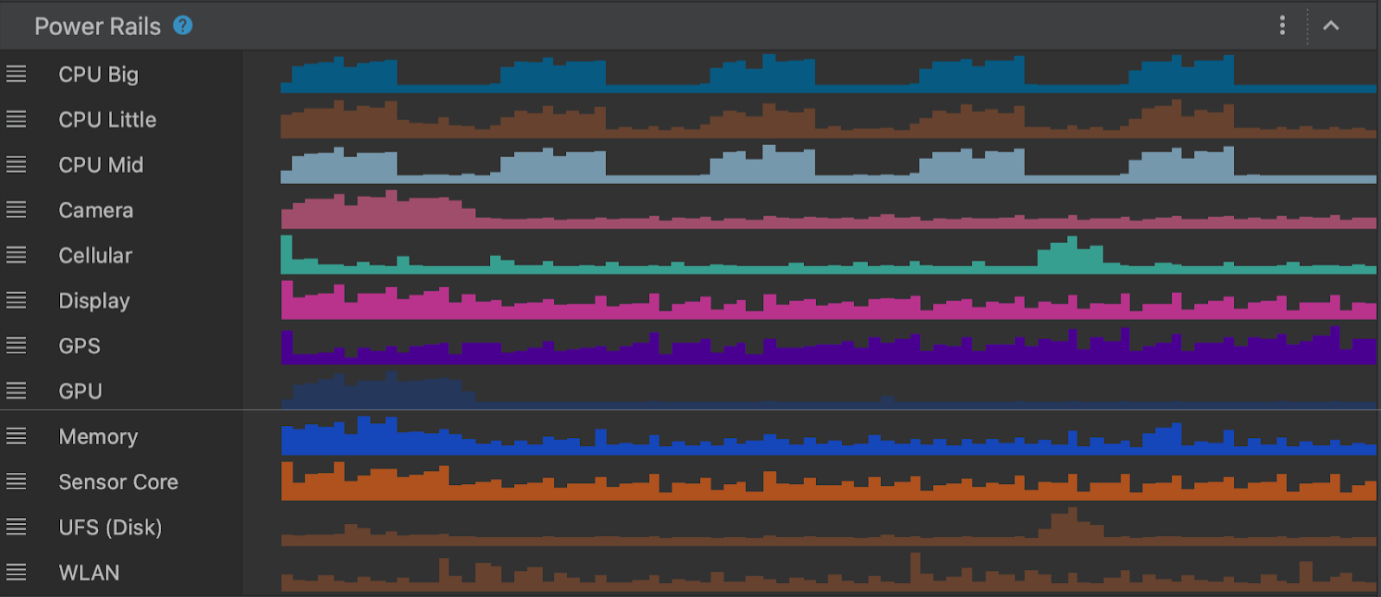

This power consumption data is made available through the On Device Power Monitor (ODPM) on Pixel 6+ devices, segmented into sub-systems called “Power Rails”. See Profileable power rails for a list of supported sub-systems.

The Power Profiler can help app developers detect problems in several areas:

- Detecting unoptimized code that is using more power than necessary.

- Finding background tasks that are causing unnecessary CPU usage.

- Identifying wakelocks that are keeping the device awake when they are not needed.

Once a power consumption issue has been identified, the Power Profiler can be used when testing different hypotheses to understand why the app could be consuming excessive power. For example, if the issue is caused by background tasks, the developer can try to stop the tasks from running unnecessarily or for longer periods. And if the issue is caused by wakelocks, the developer can try to release the wakelocks when the resource is not in use or use them more judiciously. Then compare the power consumption before/after the change using the Power Profiler.

In this blog post, we showcase a technique which uses A/B testing to understand how your app’s power consumption characteristics might change with different versions of the same feature - and how you can effectively measure them.

A real-life example of how the Power Profiler can be used to improve the battery life of an app.

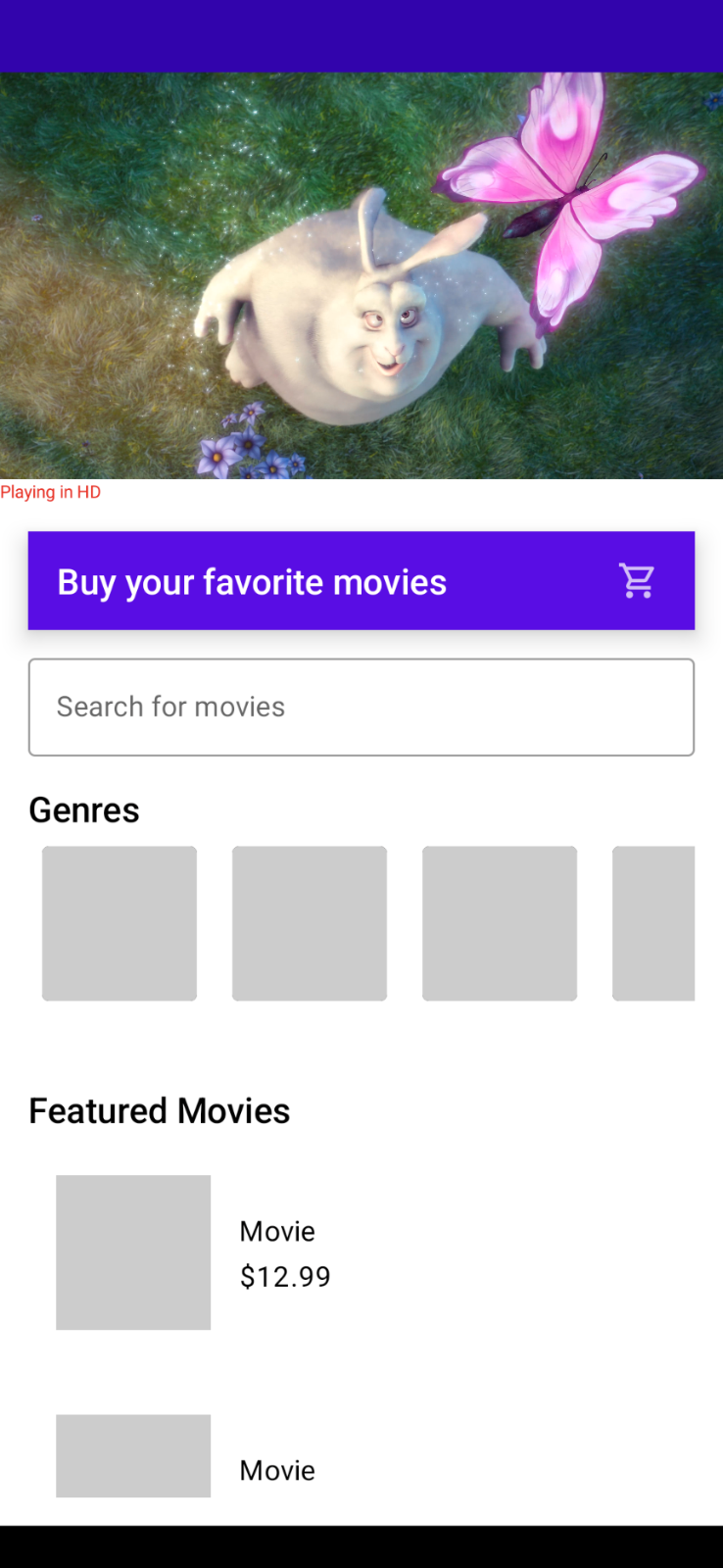

Let’s assume you have an app through which users can purchase their favorite movies.

As your app becomes popular and is used by more users, you realize that a high quality 4K video takes very long to load every time the app is started. Because of its large size, you want to understand its impact on power consumption on the device.

Originally, this video was in 4K quality with the best of intentions, to showcase the best possible movie highlights to your customers.

This makes you think…

- Do you really need a 4K video banner on the home screen?

- Does it make sense to load a 4K video over the network every time your app is run?

- How will the power consumption characteristics of your app change if you replace the 4K video with something of lower quality (while still preserving the vivid look & feel of the video)?

This is a perfect scenario to perform an A/B test for power consumption

With an A/B test, you can test two slightly different variations of the video banner feature and choose the one with the better power consumption characteristics.

Scenario A: Run the app with 4K video banner on screen & measure power consumption

Scenario B: Run the app with lower resolution video banner on screen & measure power consumption

A/B Test setup

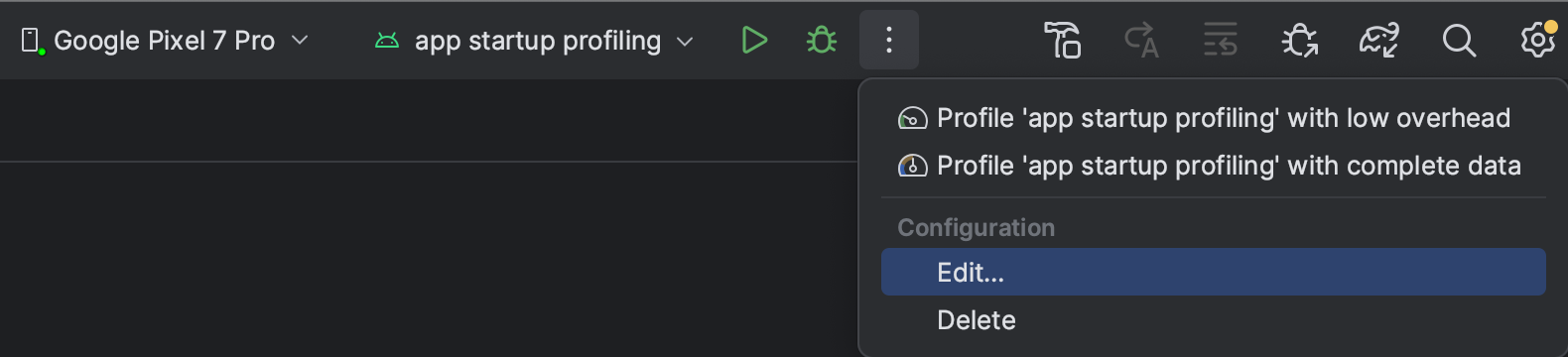

Let's take a moment and set up our Android Studio profiler to run this A/B test. We need to start the app and attach the CPU profiler to it and trigger a system trace (where the Power Profiler will be shown).

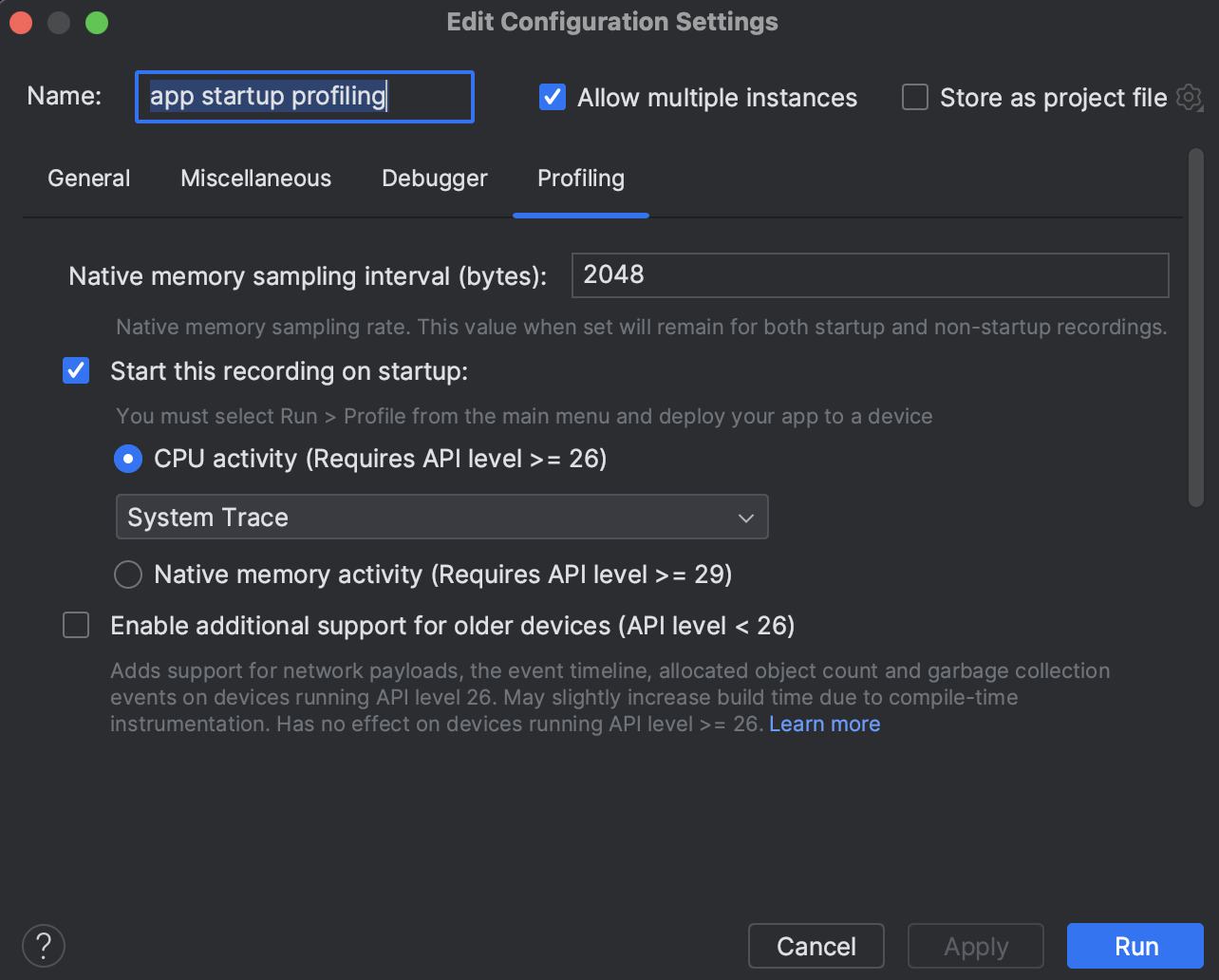

Step 1

Create a custom “Run configuration” by clicking the 3 dot menu > Edit

Step 2

Then select the “Profiling” tab and ensure that both “Start this recording on startup” and CPU Activity > System Trace are selected. Then click “Apply”.

Now simply run “Profile app startup profiling with low overhead” whenever you want to run this app from the start with the CPU profiler attached.

Note on precision

The following example scenarios use the entire app startup to estimate power consumption for the purposes of this blog post. However, you can use more advanced techniques to get even more precise power readings. Some techniques to try are:

- Isolate and measure power consumption for video playback only after a tap event on the video player

- Use the trace markers API to mark the start and stop times on the power measurement timeline, and then measure power consumption only within that marked window
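As a rough illustration of the trace marker technique, the sketch below wraps a piece of work in a begin/end marker pair so that the marked window is easy to find in the system trace. On Android the markers would typically come from `android.os.Trace.beginSection()` and `android.os.Trace.endSection()`. The `SectionTracer` interface, the `RecordingTracer` stand-in and the section name `VideoBannerPlayback` are assumptions made here so the example runs on a plain JVM; they are not part of the walkthrough above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

/** Hypothetical abstraction over a begin/end trace marker API. */
interface SectionTracer {
    void beginSection(String name);
    void endSection();
}

final class TracedWindow {
    private TracedWindow() {}

    /**
     * Runs the work between a begin marker and an end marker.
     * The end marker is emitted even if the work throws.
     */
    static <T> T measure(SectionTracer tracer, String name, Supplier<T> work) {
        tracer.beginSection(name);
        try {
            return work.get();
        } finally {
            tracer.endSection();
        }
    }
}

/**
 * In-memory stand-in so the sketch runs without the Android SDK.
 * On a device, an implementation would instead delegate to
 * android.os.Trace.beginSection(name) and android.os.Trace.endSection().
 */
final class RecordingTracer implements SectionTracer {
    final List<String> events = new ArrayList<>();

    @Override
    public void beginSection(String name) {
        events.add("begin:" + name);
    }

    @Override
    public void endSection() {
        events.add("end");
    }
}

final class TraceDemo {
    public static void main(String[] args) {
        RecordingTracer tracer = new RecordingTracer();
        // "VideoBannerPlayback" is an illustrative section name.
        String result = TracedWindow.measure(tracer, "VideoBannerPlayback", () -> "played");
        System.out.println(result + " " + tracer.events);
    }
}
```

The try/finally keeps the begin and end markers balanced even when the measured work fails. An unclosed section would otherwise stretch the marked window past the code you meant to measure.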

Scenario A

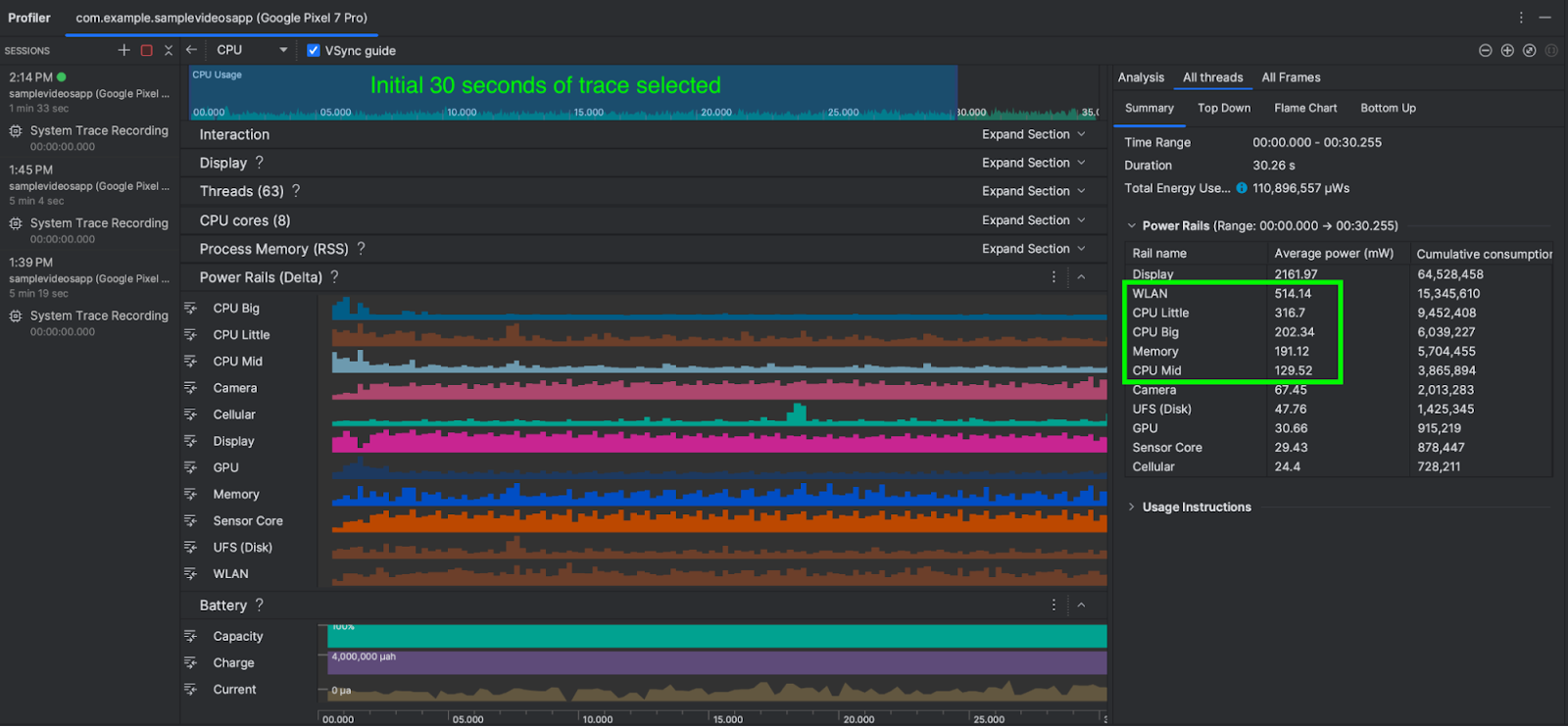

In this scenario, we run the app with 4K video playing and measure power consumption for the first 30 seconds. We can optionally run Scenario A multiple times and average out the readings. Once the System trace is shown in Android Studio, select the 0-30 second time range from the timeline selection panel and record it as a screenshot for comparison against Scenario B.

As you can see, the average power consumed by WLAN, CPU cores & Memory combined is about 1,352 mW (milliwatts).

Now let's compare and contrast how this power consumption changes in Scenario B.

Scenario B

In this scenario, we run the app with low quality video playing and measure power consumption for the first 30 seconds. As before, we can also optionally run scenario B multiple times and average out the power consumption readings. Again, once the System trace is shown in Android Studio, select the 0-30 second time range from the timeline selection panel.

The total power consumed by WLAN, CPU Little, CPU Big and CPU Mid & Memory is about 741 mW (milliwatts).

Conclusion

All else being equal, Scenario B (with lower quality video) consumed 741 mW power as compared to Scenario A (with 4K video) which required 1,352 mW power.

Scenario B (lower quality video) took 45% less power than Scenario A (4K) - while the lower quality video provides little to no visual difference in perceived quality of the app’s screen.
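The 45% figure can be reproduced from the two readings. The following is a minimal sketch, assuming you copy the combined rail readings from the Power Profiler by hand. The class and method names are illustrative, and `averageMilliwatts` is included only for the optional case where a scenario is run several times.

```java
import java.util.Locale;

final class PowerComparison {
    private PowerComparison() {}

    /** Arithmetic mean of one or more readings, in milliwatts. */
    static double averageMilliwatts(double... readings) {
        if (readings.length == 0) {
            throw new IllegalArgumentException("at least one reading is required");
        }
        double sum = 0;
        for (double reading : readings) {
            sum += reading;
        }
        return sum / readings.length;
    }

    /** Relative reduction of the candidate versus the baseline, as a percentage. */
    static double reductionPercent(double baselineMw, double candidateMw) {
        if (baselineMw <= 0) {
            throw new IllegalArgumentException("baseline must be positive");
        }
        return (baselineMw - candidateMw) / baselineMw * 100.0;
    }

    public static void main(String[] args) {
        // Single readings taken from the walkthrough: Scenario A and Scenario B.
        double scenarioA = averageMilliwatts(1352);
        double scenarioB = averageMilliwatts(741);
        double reduction = reductionPercent(scenarioA, scenarioB);
        System.out.println(String.format(Locale.ROOT,
                "Scenario B used %.1f%% less power than Scenario A", reduction));
    }
}
```

With several runs per scenario, pass all readings for that scenario to `averageMilliwatts` before comparing, so that a single noisy run does not dominate the result.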

As a result of this A/B test for power consumption, you conclude that replacing the 4K video with a lower quality video on your app’s home screen not only reduces power consumption by 45%, but also reduces the required network bandwidth and can potentially improve the thermal performance of the devices.

If your app’s business logic still requires the 4K video to be shown on the app’s screen, you can explore strategies like:

- Caching the 4K video across subsequent runs of the app.

- Loading video on a user tap.

- Loading an image initially and only loading the video after the screen has fully rendered (delayed loading).

The overall power consumption numbers presented in the above A/B test scenario might seem small, but they demonstrate the techniques that app developers can use to effectively A/B test power consumption for their app’s features using the Power Profiler in Android Studio.

Next Steps

The new Power Profiler is available in Android Studio Hedgehog onwards. To learn more, please head over to the official documentation.

The First Beta of Android 15

2024-04-11 23:21 Android Developers

Today we're releasing the first beta of Android 15. With the progress we've made refining the features and stability of Android 15, it's time to open the experience up to both developers and early adopters, so you can now enroll any supported Pixel device here to get this and future Android 15 Beta and feature drop Beta updates over-the-air.

Android 15 continues our work to build a platform that helps improve your productivity, give users a premium app experience, protect user privacy and security, and make your app accessible to as many people as possible — all in a vibrant and diverse ecosystem of devices, silicon partners, and carriers.

Android delivers enhancements and new features year-round, and your feedback on the Android beta program plays a key role in helping Android continuously improve. The Android 15 developer site has lots more information about the beta, including downloads for Pixel and the release timeline. We’re looking forward to hearing what you think, and thank you in advance for your continued help in making Android a platform that works for everyone.

We’ll have lots more to share as we move through the release cycle, and be sure to tune into Google I/O where you can dive deeper into topics that interest you with over 100 sessions, workshops, codelabs, and demos.

Edge-to-edge

Apps targeting Android 15 are displayed edge-to-edge by default on Android 15 devices. This means that apps no longer need to explicitly call Window.setDecorFitsSystemWindows(false) or enableEdgeToEdge() to show their content behind the system bars, although we recommend continuing to call enableEdgeToEdge() to get the edge-to-edge experience on earlier Android releases.

To assist your app with going edge-to-edge, many of the Material 3 composables handle insets for you, based on how the composables are placed in your app according to the Material specifications.

The system bars are transparent or translucent and content will draw behind by default. Refer to "Handle overlaps using insets" (Views) or Window insets in Compose to see how to prevent important touch targets from being hidden by the system bars.

Smoother NFC experiences - part 2

Android 15 is working to make the tap to pay experience more seamless and reliable while continuing to support Android's robust NFC app ecosystem. In addition to the observe mode changes from Android 15 developer preview 2, apps can now register a fingerprint on supported devices so they can be notified of polling loop activity, which allows for smooth operation with multiple NFC-aware applications.

Inter-character justification

Starting with Android 15, text can be justified utilizing letter spacing by using JUSTIFICATION_MODE_INTER_CHARACTER. Inter-word justification was first introduced in Android O, but inter-character solves for languages that use the white space for segmentation, e.g. Chinese, Japanese, etc.

App archiving

Android and Google Play announced support for app archiving last year, allowing users to free up space by partially removing infrequently used apps from the device that were published using Android App Bundle on Google Play. Android 15 now includes OS level support for app archiving and unarchiving, making it easier for all app stores to implement it.

Apps with the REQUEST_DELETE_PACKAGES permission can call the PackageInstaller requestArchive method to request archiving a currently installed app package, which removes the APK and any cached files, but persists user data. Archived apps are returned as displayable apps through the LauncherApps APIs; users will see a UI treatment to highlight that those apps are archived. If a user taps on an archived app, the responsible installer will get a request to unarchive it, and the restoration process can be monitored by the ACTION_PACKAGE_ADDED broadcast.

App-managed profiling

Android 15 includes the all new ProfilingManager class, which allows you to collect profiling information from within your app. We're planning to wrap this with an Android Jetpack API that will simplify construction of profiling requests, but the core API will allow the collection of heap dumps, heap profiles, stack sampling, and more. It provides a callback to your app with a supplied tag to identify the output file, which is delivered to your app's files directory. The API does rate limiting to minimize the performance impact.

Better Braille

In Android 15, we've made it possible for TalkBack to support Braille displays that are using the HID standard over both USB and secure Bluetooth.

This standard, much like the one used by mice and keyboards, will help Android support a wider range of Braille displays over time.

Key management for end-to-end encryption

We are introducing the E2eeContactKeysManager in Android 15, which facilitates end-to-end encryption (E2EE) in your Android apps by providing an OS-level API for the storage of cryptographic public keys.

The E2eeContactKeysManager is designed to integrate with the platform contacts app to give users a centralized way to manage and verify their contacts' public keys.

Secured background activity launches

Android 15 brings additional changes to prevent malicious background apps from bringing other apps to the foreground, elevating their privileges, and abusing user interaction, aiming to protect users from malicious apps and give them more control over their devices. Background activity launches have been restricted since Android 10.

App compatibility

With Android 15 now in beta, we're opening up access to early-adopter users as well as developers, so if you haven't yet tested your app for compatibility with Android 15, now is the time to do it. In the weeks ahead, you can expect more users to try your app on Android 15 and raise issues they find.

To test for compatibility, install your published app on a device or emulator running Android 15 beta and work through all of your app's flows. Review the behavior changes to focus your testing. After you've resolved any issues, publish an update as soon as possible.

To give you more time to plan for app compatibility work, we’re letting you know our Platform Stability milestone well in advance.

At this milestone, we’ll deliver final SDK/NDK APIs and also final internal APIs and app-facing system behaviors. We’re expecting to reach Platform Stability in June 2024, and from that time you’ll have several months before the official release to do your final testing. The release timeline details are here.

Get started with Android 15

Today's beta release has everything you need to try the Android 15 features, test your apps, and give us feedback. Now that we've entered the beta phase, you can enroll any supported Pixel device here to get this and future Android Beta updates over-the-air. If you don’t have a Pixel device, you can use the 64-bit system images with the Android Emulator in Android Studio. If you're already in the Android 14 QPR beta program on a supported device, or have installed the developer preview, you'll automatically get updated to Android 15 Beta 1.

For the best development experience with Android 15, we recommend that you use the latest version of Android Studio Jellyfish (or more recent Jellyfish+ versions). Once you’re set up, here are some of the things you should do:

- Try the new features and APIs - your feedback is critical during the early part of the developer preview and beta program. Report issues in our tracker on the feedback page.

- Test your current app for compatibility - learn whether your app is affected by changes in Android 15; install your app onto a device or emulator running Android 15 and extensively test it.

We’ll update the beta system images and SDK regularly throughout the Android 15 release cycle. Read more here.

For complete information, visit the Android 15 developer site.

Java and OpenJDK are trademarks or registered trademarks of Oracle and/or its affiliates.

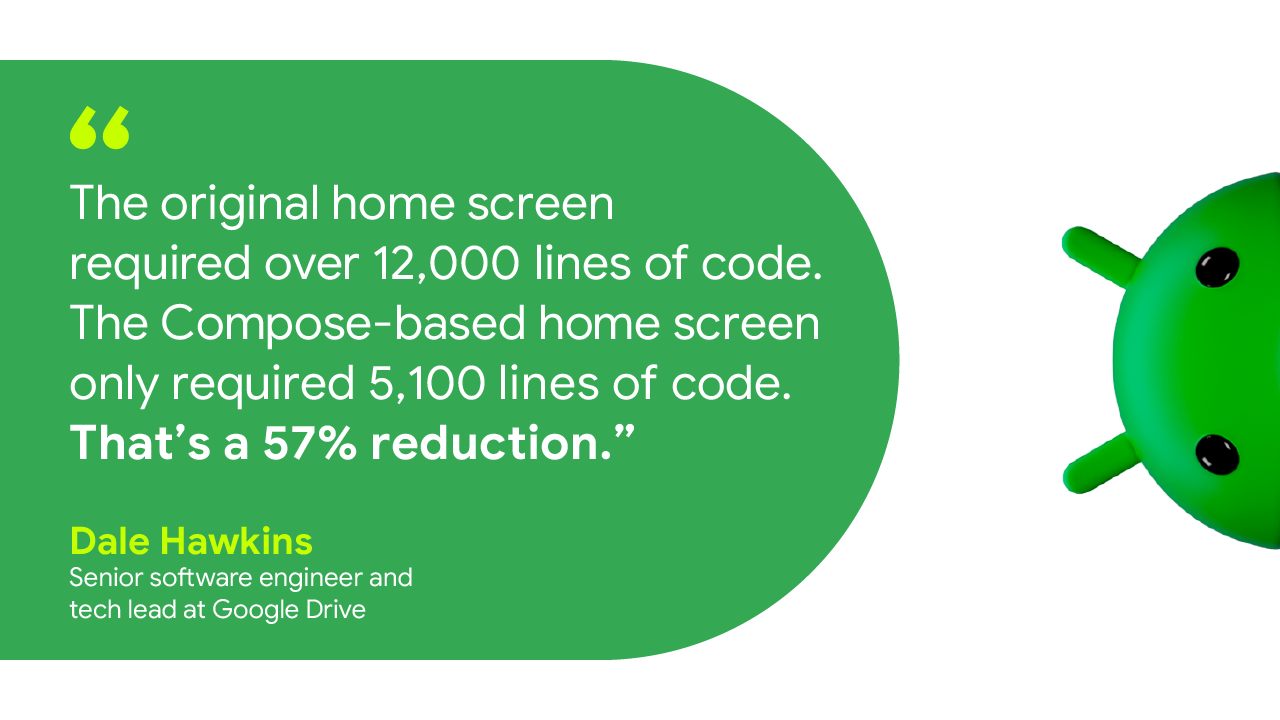

Google Drive cut code and development time in half with Jetpack Compose and new architecture

2024-04-10 18:05 Android Developers

As one of the world’s most popular cloud-based storage services, Google Drive lets people do more than just store their files online. With Drive, users can synchronize, share, search, edit, and even pin specified files and content for safe and secure offline use.

Recently, Drive’s developers revamped the application’s home screen to provide a more seamless experience across devices, matching updates made to Google Drive’s web version. However, the app’s previous architecture and codebase would’ve prevented the team from completing the updates in a timely manner.

Instead of struggling with the app’s previous tech stack to implement the update, the Drive team rebuilt the home page from the ground up using Android’s recommended architecture and Jetpack Compose, Android’s modern declarative toolkit for creating native UI.

Experimenting with Kotlin and Compose

The Drive team experimented with Kotlin (which the Compose toolkit is built with) for several months before planning the app’s home screen rebuild. Drive’s developers liked Kotlin’s improved syntax and null enforcement, making it easier to produce code.

“We had been using RxJava, but started looking into replacing that with coroutines,” said Dale Hawkins, the features team lead for Google Drive. “This led to a more natural alignment between coroutines and Jetpack Compose. After a deep dive into Compose, we came away with a clear understanding of how Compose has numerous benefits over the Views-based approach.”

Following the Kotlin exploration, Dale experimented with Jetpack Compose. “I was pleased with how easy it was to build the UI using Compose. So I continued the experiment after that week,” said Dale. “I eventually rewrote the feature using Compose.”

Using Compose

Shortly after experimenting with Jetpack Compose, the Drive team decided to use it to completely rebuild the app’s home screen UI.

“We wanted to make some major changes to match the ones being done for the web version, but that project had a several-month head start. We wanted to release the Android version shortly after the web changes went live to ensure our users have a seamless Google Drive experience across devices,” said Dale.

The Drive team's experimentation and testing with Jetpack Compose showed that the new toolkit was powerful and reliable and that it would enable them to move faster. With this in mind, the Drive team decided to step away from their old codebase and embrace Jetpack Compose for the app’s home screen update. Not only would it be quicker and easier, but it would also better prepare the team to easily make future UI changes.

Using Android’s guidance for architecture

Before going all-in with Jetpack Compose, Drive developers wanted to restructure the application by implementing a completely new app architecture. Drive developers followed Android’s official architecture guidance to apply structural changes, paving the way for the new Kotlin codebase.

“The recommended architecture reinforces good separation between layers,” said Quintin Knudsen, an Android engineer for Google Drive. “We work in a highly dynamic environment and need to be able to adjust to any app changes. Using well-defined and independent layers helps isolate any changes or UI requirements. The recommendations from Android offered sound ways to structure the layers.”

With a clear separation between the app’s data and UI layers, developers could work in parallel to significantly speed up testing and development. Drive developers also relied on Mappers and UseCases when creating the new architecture. These patterns allowed them to create flexible code that is easier to manage. They also exposed flows from their ViewModels to make the UI respond immediately to any data changes, making it much simpler to implement and understand UI updates.

Less code, faster development

With the app’s newly improved architecture and Jetpack Compose, the Drive team was able to develop the app’s new home screen in less than half the time that they expected. They also implemented the new code and finished quality assurance testing nearly seven weeks ahead of schedule.

“Thanks to Compose, we had the groundwork done within a couple of weeks. We delivered a great implementation over a month ahead of schedule, and it’s been praised by product, UX, and even other engineering teams,” said Dale.

Despite having fewer features, the original home screen required over 12,000 lines of code. The new Compose-based home screen has many new features and only required 5,100 lines of code, a 57% reduction. Having less code makes it much easier for developers to maintain the app and implement any updates.

Testing the new UI in Jetpack Compose also required significantly less code. Before Compose, Drive developers used roughly 9,000 lines of code to test about 62% of the UI. With Compose, it took only 2,200 lines to test over 80% of the new UI.

Looking forward

A new and improved app architecture paired with Jetpack Compose allowed Drive developers to rebuild the app’s home screen UI faster and easier than they could’ve imagined. The Drive team plans to expand its use of Compose within the application for things like supporting large dynamic displays and text resizing.

“As we work on new projects, we’re taking the opportunity to update older UI code to make use of our new architecture and Compose. The new code will be objectively better and features will be easier to write, test, and maintain,” said Dale.

Get started

Improve app architecture using Android’s official architecture guidance and optimize your UI development with Jetpack Compose.

Android Studio uses Gemini Pro to make Android development faster and easier

2024-04-08 20:00 Android Developers

As part of the next chapter of our Gemini era, we announced we were bringing Gemini to more products. Today we’re excited to announce that Android Studio is using the Gemini 1.0 Pro model to make Android development faster and easier, and we’ve seen significant improvements in response quality over the last several months through our internal testing. In addition, we are making this transition more apparent by announcing that Studio Bot is now called Gemini in Android Studio.

Gemini in Android Studio is an AI-powered coding assistant which can be accessed directly in the IDE. It can help you develop high-quality Android apps faster by generating code for your app, providing complex code completions, answering your questions, finding relevant resources, adding code comments and more, all without ever having to leave Android Studio. It is available in 180+ countries and territories in Android Studio Jellyfish.

If you were already using Studio Bot in the canary channel, you’ll continue experiencing the same helpful and powerful features, but you’ll notice improved quality in responses compared to earlier versions.

Ask Gemini your Android development questions

Gemini in Android Studio can understand natural language, so you can ask development questions in your own words. In the chat window, you can enter questions ranging from very simple and open-ended ones to specific problems that you need help with.

Here are some examples of the types of queries it can answer:

- How do I add camera support to my app?

- Using Compose, I need a login screen with the following: a username field, a password field, a 'Sign In' button, a 'Forgot Password?' link. I want the password field to obscure the input.

- What's the best way to get location on Android?

- I have an 'orders' table with columns like 'order_id', 'customer_id', 'product_id', 'price', and 'order_date'. Can you help me write a query that calculates the average order value per customer over the last month?

Gemini in Android Studio remembers the context of the conversation, so you can also ask follow-up questions, such as “Can you give me the code for this in Kotlin?” or “Can you show me how to do it in Compose?”

Code faster with AI powered Code Completions

Gemini in Android Studio can help you be more productive by providing you with powerful AI code completions. You can receive multi-line code completions, suggested comments for your code, and suggestions for how to add documentation to your code.

Designed with privacy in mind

Gemini in Android Studio was designed with privacy in mind. Gemini is only available after you log in and enable it. You don’t need to send your code context to take advantage of most features. By default, Gemini in Android Studio’s chat responses are purely based on conversation history, and you control whether you want to share additional context for customized responses. You can update this anytime in Android Studio > Settings at a granular project level. We also have a custom way for you to opt out certain files and folders through an .aiexclude file. Much like our work on other AI projects, we stick to a set of AI Principles that hold us accountable. Learn more here.

Build a Generative AI app using the Gemini API starter template

Not only does Android Studio use Gemini to help you be more productive, it can also help you take advantage of Gemini models to create AI-powered features in your applications. Get started in minutes using the Gemini API starter template available in the canary release channel for Android Studio, under File > New Project > Gemini API Starter. You can also use the code sample available at File > Import Sample > Google Generative AI sample.

The Gemini API is multimodal, meaning it can support image and text inputs. For example, it can support conversational chat, summarization, translation, caption generation etc. using both text and image inputs.

Try Gemini in Android Studio

Gemini in Android Studio is still in preview, but we have added many feature improvements — and now a major model update — since we released the experience in May 2023. It is currently no-cost for developers to try out. Now is a great time to test it and let us know what you think, before we release this experience to stable.

Stay updated on the latest by following us on LinkedIn, Medium, YouTube, or X. Let's build the future of Android apps together!

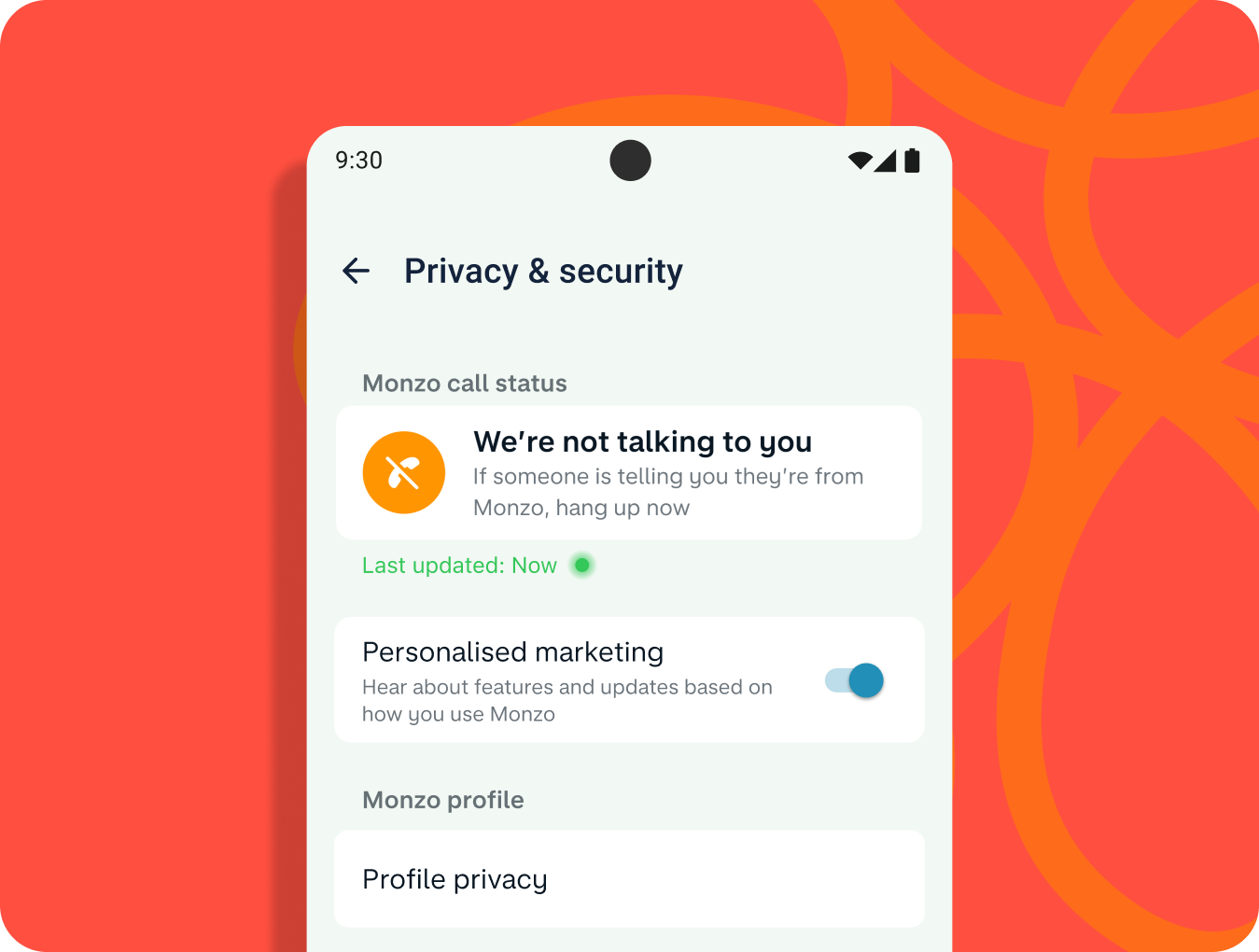

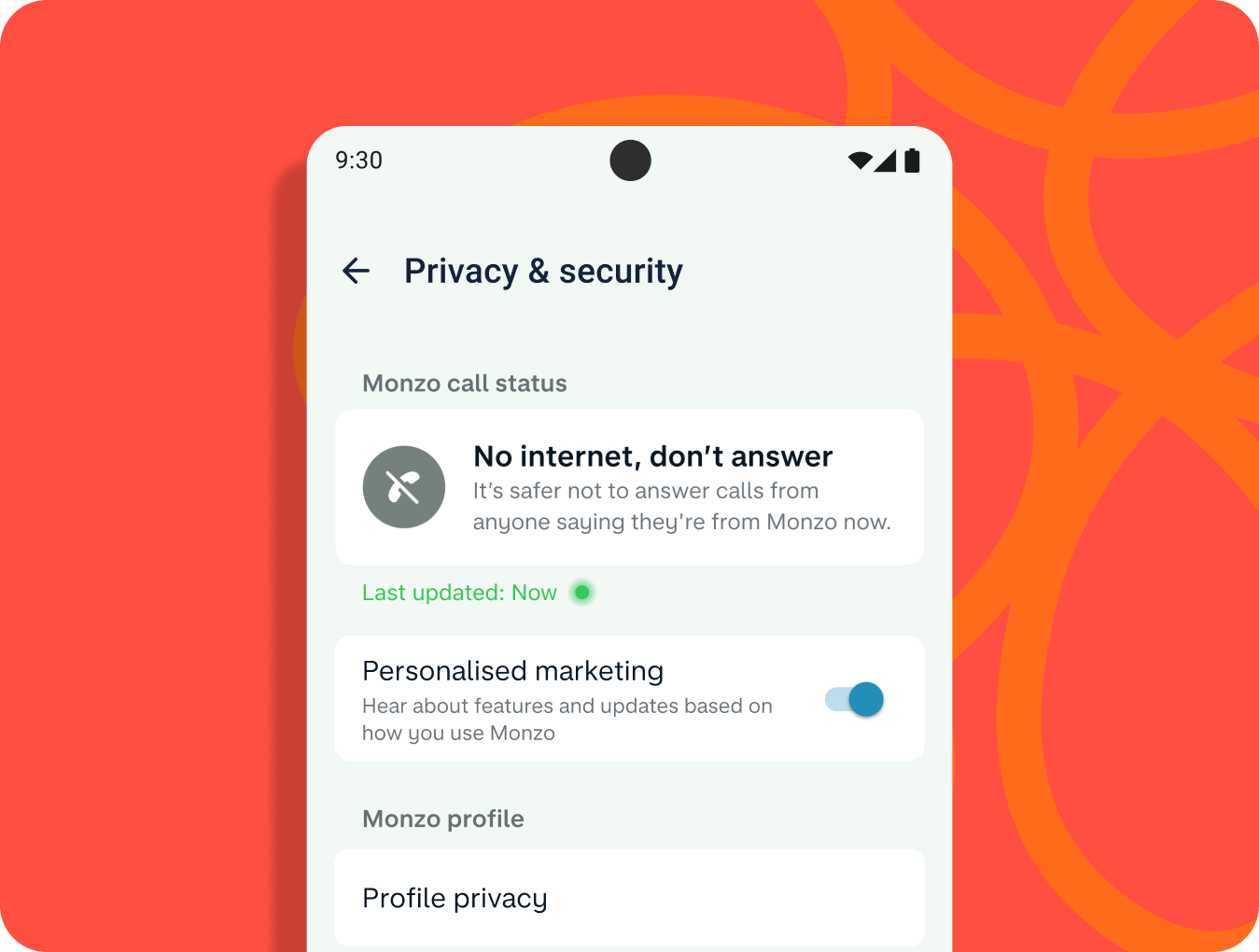

Battling Impersonation Scams: Monzo’s Innovative Approach

2024-03-29 19:00 Android Developers

Cybercriminals continue to invest in advanced financial fraud scams, costing consumers more than $1 trillion in losses. According to the 2023 Global State of Scams Report by the Global Anti-Scam Alliance, 78 percent of mobile users surveyed experienced at least one scam in the last year. Of those surveyed, 45 percent said they’re experiencing more scams in the last 12 months.

The Global Scam Report also found that phone calls are the top method to initiate a scam. Scammers frequently employ social engineering tactics to deceive mobile users.

The key place these scammers want individuals to take action are in the tools that give access to their money. This means financial services are frequently targeted. As cybercriminals push forward with more scams, and their reach extends globally, it’s important to innovate in the response.

One such innovator is Monzo, who have been able to tackle scam calls through a unique impersonation detection feature in their app.

Monzo’s Innovative Approach

Founded in 2015, Monzo is the largest digital bank in the UK with presence in the US as well. Their mission is to make money work for everyone with an ambition to become the one app customers turn to to manage their entire financial lives.

Impersonation fraud is an issue that the entire industry is grappling with and Monzo decided to take action and introduce an industry-first tool. An impersonation scam is a very common social engineering tactic when a criminal pretends to be someone else so they can encourage you to send them money. These scams often involve using urgent pretenses that involve a risk to a user’s finances or an opportunity for quick wealth. With this pressure, fraudsters convince users to disable security safeguards and ignore proactive warnings for potential malware, scams, and phishing.

Call Status Feature

Android offers multiple layers of spam and phishing protection for users including call ID and spam protection in the Phone by Google app. Monzo’s team wanted to enhance that protection by leveraging their in-house telephone systems. By integrating with their mobile application infrastructure they could help their customers confirm in real time when they’re actually talking to a member of Monzo’s customer support team in a privacy preserving way.

If someone calls a Monzo customer stating they are from the bank, their users can go into the app to verify this. In the Monzo app’s Privacy & Security section, users can see the ‘Monzo Call Status’, letting them know if there is an active call ongoing with an actual Monzo team member.

“We’ve built this industry-first feature using our world-class tech to provide an additional layer of comfort and security. Our hope is that this could stop instances of impersonation scams for Monzo customers from happening in the first place and impacting customers.”

- Priyesh Patel, Senior Staff Engineer, Monzo’s Security team

Keeping Customers Informed

If a user is not talking to a member of Monzo’s customer support team they will see that as well as some helpful information. If the ‘Monzo call status’ is showing that you are not speaking to Monzo, the call status feature tells you to hang up right away and report it to their team. Their customers can start a scam report directly from the call status feature in the app. If a genuine call is ongoing the customer will see the information.

How does it work?

Monzo has integrated a few systems together to help inform their customers. A cross functional team was put together to build a solution. Monzo’s in-house technology stack meant that the systems that power their app and customer service phone calls can easily communicate with one another. This allowed them to link the two and share details of customer service calls with their app, accurately and in real-time.

The team then worked to identify edge cases, like when the user is offline. In this situation Monzo recommends that customers don’t speak to anyone claiming they’re from Monzo until you’re connected to the internet again and can check the call status within the app.

Results and Next Steps

The feature has proven highly effective in safeguarding customers, and received universal praise from industry experts and consumer champions.

“Since we launched Call Status, we receive an average of around 700 reports of suspected fraud from our customers through the feature per month.

Now that it’s live and helping protect customers, we’re always looking for ways to improve Call Status - like making it more visible and easier to find if you’re on a call and you want to quickly check that who you’re speaking to is who they say they are.”

- Priyesh Patel, Senior Staff Engineer, Monzo’s Security team

Final Advice

Monzo continues to invest and innovate in fraud prevention. The call status feature brings together both technological innovation and customer education to achieve its success, and gives their customers a way to catch scammers in action. A layered security approach is a great way to protect users. Android and Google Play provide layers like app sandboxing, Google Play Protect, and privacy preserving permissions, and Monzo has built an additional one in a privacy-preserving way.

To learn more about Android and Play’s protections and to further protect your app check out these resources:

#WeArePlay | Meet the founders changing women's lives: Women’s History Month Stories

2024-03-25 20:11 Android Developers

In celebration of Women’s History month, we’re celebrating the founders behind groundbreaking apps and games from around the world - made by women or for women. Let's discover four of my favorites in this latest batch of nine #WeArePlay stories.

Múkami Kinoti Kimotho

Royelles Revolution / Royelles Revolution: Gaming For Girls (USA)

Múkami's journey began when she noticed the lack of representation for girls in the gaming industry. Determined to change this narrative, she created Royelles, a game designed to inspire girls and non-binary people to pursue careers in STEAM (science, technology, engineering, art, math) fields. The game is anchored in fierce female avatars, like the real-life NASA scientist Mara, who voices a character. Royelles is revolutionizing the gaming landscape and empowering the next generation of innovators. Múkami's excited to release more gamified stories and learning modules, and a range of extended reality and AI-powered avatars based on the game’s characters.

“If we're going to effectively educate Gen Z and Gen Alpha, we have to meet them in the metaverse and leverage gamified play as a means of driving education, awareness, inspiration and empowerment.” - Múkami

Leonika Sari Njoto Boedioetomo

Reblood: Blood Services App (Indonesia)

When her university friend needed an urgent blood transfusion but discovered there was none available in the blood bank, Leonika became aware of the blood donation shortage in Indonesia. Her mission to address this led her to create Reblood, an app connecting blood donors with those in need. With over 140,000 blood donations facilitated to date, Reblood is not only saving lives but also promoting healthier lifestyles with a recently added feature that allows people to find the most affordable medical checkups.

“Our goal is to save more lives by raising awareness of blood donation in Indonesia and promoting healthier lifestyles for blood donors.” - Leonika

Luciane Antunes dos Santos and Renato Hélio Rauber

CARSUL / Car Sul: Urban Mobility App (Brazil)

Luciane was devastated when she lost her son in a car accident. Her and her husband Renato's loss led them to develop Carsul, an urban mobility app prioritizing safety and security. By providing safe transportation options and partnering with government health programs to chauffeur patients long distances to larger hospitals, Carsul is not only preventing accidents but also saving lives. Luciane and Renato's dedication to protecting others from the pain they've experienced is ongoing and they plan to expand to more cities in Brazil.

“Carsul was born from this story of loss, inspiring me to protect other lives. Redefining myself in this way is very rewarding.” - Luciane

Diariata (Diata) N'Diaye

Resonantes / App-Elles: Safety App for Women (France)

After hearing the stories of young people who had experienced abuse that was similar to her own, spoken word artist Diata developed App-Elles – an app that allows women to send alerts when they're in danger. By connecting users with support networks and professional services, App-Elles is empowering women to reclaim their safety and seek help when needed. Diata also runs writing and recording workshops to help victims overcome their experiences with violence and has plans to expand her app with the introduction of a discreet wearable that sends out alerts.

“I realized from my work on the ground that there were victims of violence who needed help and support systems. This was my inspiration to create App-Elles.” - Diata

Discover more #WeArePlay stories and share your favorites.

Posted by Mayank Jain - Product Manager, and Yasser Dbeis - Software Engineer; Android Studio

Posted by Todd Burner – Developer Relations Engineer

Posted by Leticia Lago – Developer Marketing